The next generation of AI will be powered by Nvidia hardware, the company has declared after revealing its latest generation of GPUs.

Company CEO Jensen Huang took the wraps off the new Blackwell chips at Nvidia GTC 2024 today, promising a major step forward in terms of AI power and efficiency.

The first Blackwell “superchip”, the GB200, is set to ship later this year. It can scale up from a single rack all the way to an entire data center, as Nvidia looks to extend its lead in the AI race.

Nvidia Blackwell

Blackwell represents a significant step forward from its predecessor, Hopper. Huang noted that Blackwell contains 208 billion transistors (up from 80 billion in Hopper) across its two GPU dies, which are connected by a 10 TB/second chip-to-chip link into a single, unified GPU.

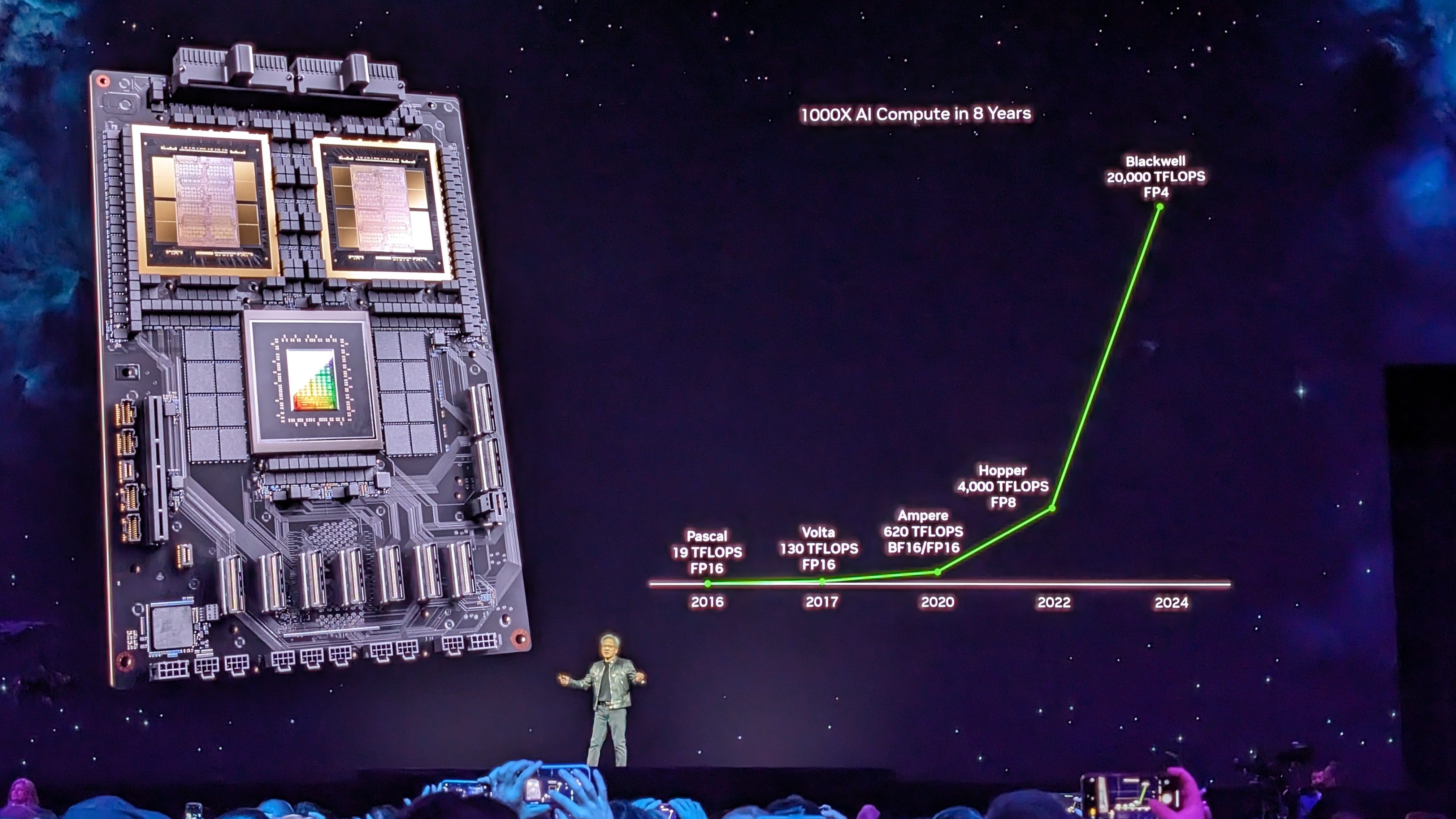

This makes Blackwell up to 30x faster than Hopper for AI inference tasks. It offers up to 20 petaflops of FP4 power, far ahead of anything else on the market today.

During his keynote, Huang highlighted not only the huge leap in power between Blackwell and Hopper, but also the major difference in size.

“Blackwell’s not a chip, it’s the name of a platform,” Huang said. “Hopper is fantastic, but we need bigger GPUs.”

Despite this, Nvidia says Blackwell can reduce cost and energy consumption by up to 25x. The company gave the example of training a 1.8 trillion parameter model: this would previously have taken 8,000 Hopper GPUs and 15 megawatts of power, but can now be done with just 2,000 Blackwell GPUs consuming only four megawatts.
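The training example above can be checked with simple arithmetic. The short Python sketch below uses only the figures Nvidia quoted: GPU counts and total power in megawatts. The variable names are illustrative. On those figures, the example works out to four times fewer GPUs and 3.75 times less power. That means the "up to 25x" headline figure is not derived from this particular comparison.

```python
# Figures quoted by Nvidia for training a 1.8-trillion-parameter model.
HOPPER_GPUS = 8_000
HOPPER_MEGAWATTS = 15
BLACKWELL_GPUS = 2_000
BLACKWELL_MEGAWATTS = 4


def reduction_factor(before: float, after: float) -> float:
    """Return how many times smaller `after` is than `before`."""
    return before / after


# Ratio of GPU counts: Hopper setup vs Blackwell setup.
gpu_reduction = reduction_factor(HOPPER_GPUS, BLACKWELL_GPUS)
# Ratio of total power draw in megawatts: Hopper setup vs Blackwell setup.
power_reduction = reduction_factor(HOPPER_MEGAWATTS, BLACKWELL_MEGAWATTS)

print(f"{gpu_reduction:.2f}x fewer GPUs, {power_reduction:.2f}x less power")
```

Running it prints `4.00x fewer GPUs, 3.75x less power`.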

The new GB200 brings together two Nvidia B200 Tensor Core GPUs and a Grace CPU to create what the company simply calls “a massive superchip” capable of driving AI development forward. Nvidia says it provides 7x the performance and four times the training speed of an H100-powered system.

The company also revealed a next-gen NVLink network switch chip with 50 billion transistors. It will allow up to 576 GPUs to talk to each other, with 1.8 terabytes per second of bidirectional bandwidth.

Nvidia has already signed up a host of major partners to build Blackwell-powered systems. AWS, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure are on board, alongside many other big industry names.