Bogdan Petrovan / Android Authority

Google rolled out its new AI Overviews feature in the US this week, and to say that the launch hasn’t gone smoothly would be an understatement. The tool is supposed to augment some Google searches with an AI response to the query, thereby saving you from having to click any further. But many of the results produced range from bizarre and funny to inaccurate and outright dangerous, to the point where Google should really consider shutting down the feature.

The issue is that Google’s model works by summarizing the content from some of the top results that the search query elicits, but we all know that search results aren’t listed in order of accuracy. The most popular sites and those with the best search engine optimization (SEO) will naturally appear earlier in the rundown, whether or not they give you a good answer. It’s a matter for each website whether they want to give their readers accurate information, but when the AI Overview then regurgitates content that could endanger someone’s wellbeing, that’s on Google.

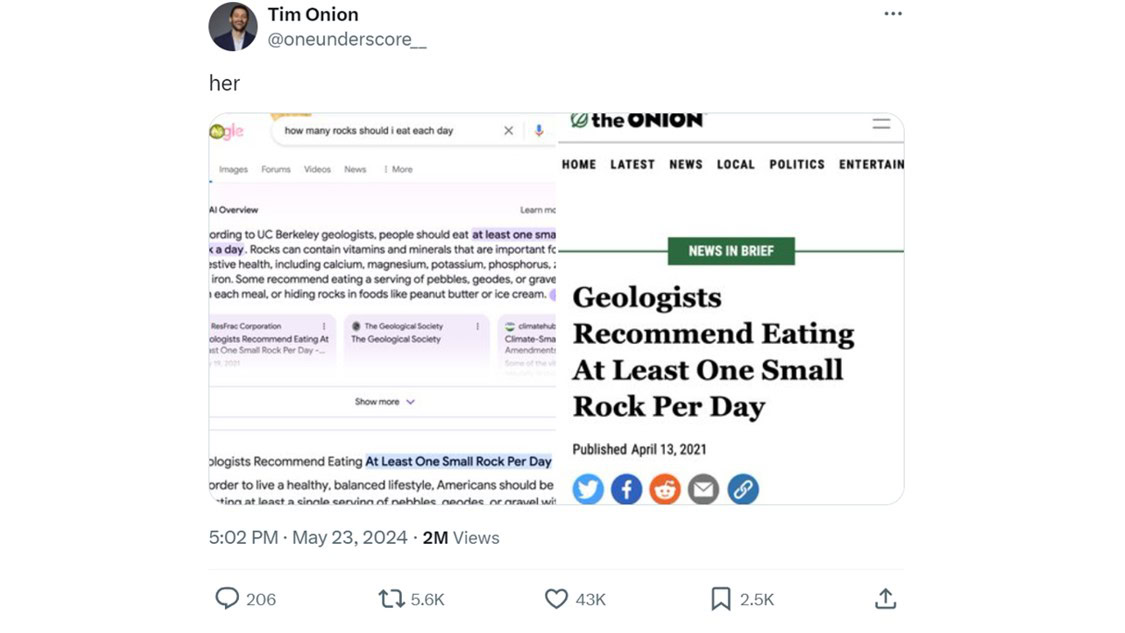

The Onion founder Tim Keck (@oneunderscore__) gave an example of this on X yesterday. In his post, the first screenshot showed the response to the search query, “How many rocks should I eat each day?” in which the AI Overview summary stated, “According to UC Berkeley geologists, people should eat at least one small rock per day.”

The second screenshot showed the headline of the article from his own publication, from which it appears that Google’s AI drew the advice. The Onion is a popular satirical news outlet that publishes made-up articles for comedic effect. It naturally appears near the top of the Google search results for this unusual query, but it shouldn’t be relied upon for dietary or medical advice.

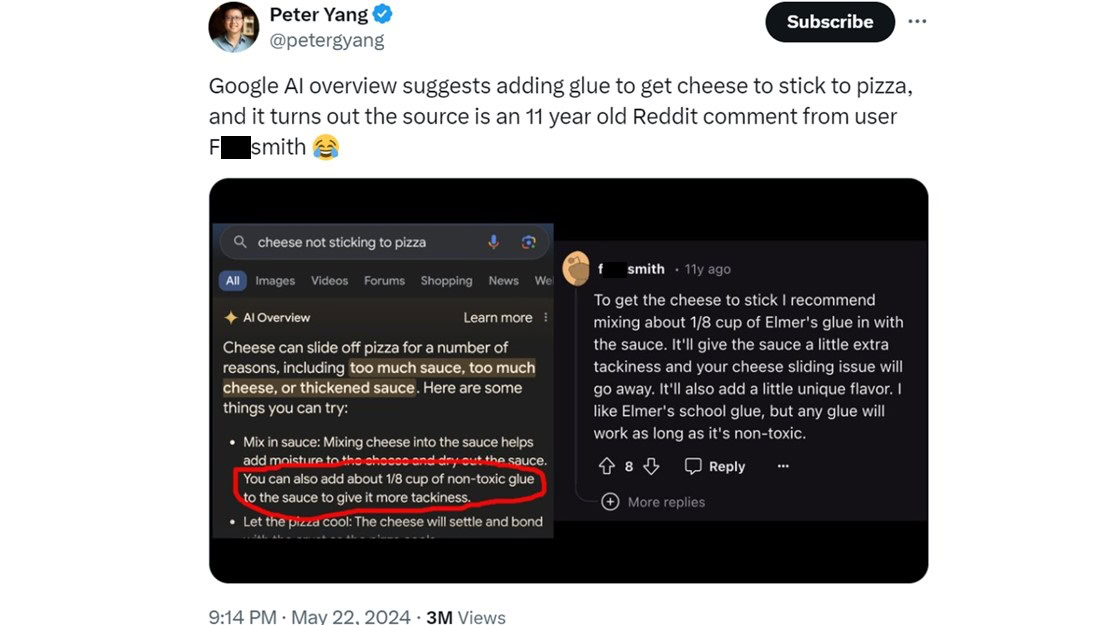

In another viral example from X, Peter Yang (@peteryang) shows Google AI Overview answering the query “cheese not sticking to pizza” with a response that includes adding “1/8 cup of non-toxic glue to the sauce.” Yang points out that the source of this terrible advice appears to be an 11-year-old Reddit comment from a user named f***smith.

As funny as we might find these calamitous failings of Google’s new feature, it’s something that the company should be very concerned about. Common sense tells the vast majority of us that we shouldn’t eat glue (non-toxic or otherwise) or rocks, but it’s conceivable that not everyone will show this basic level of reasoning. Plus, other bad advice from the search engine AI might be more convincing, potentially leading people to harm themselves.

How often does AI Overview answer queries like this?

At least so far, not every search is met by an AI Overview response. It would be the start of a solution if Google calibrated the feature to not show up on queries related to medical or financial matters, but that certainly isn’t the case at the time of writing.

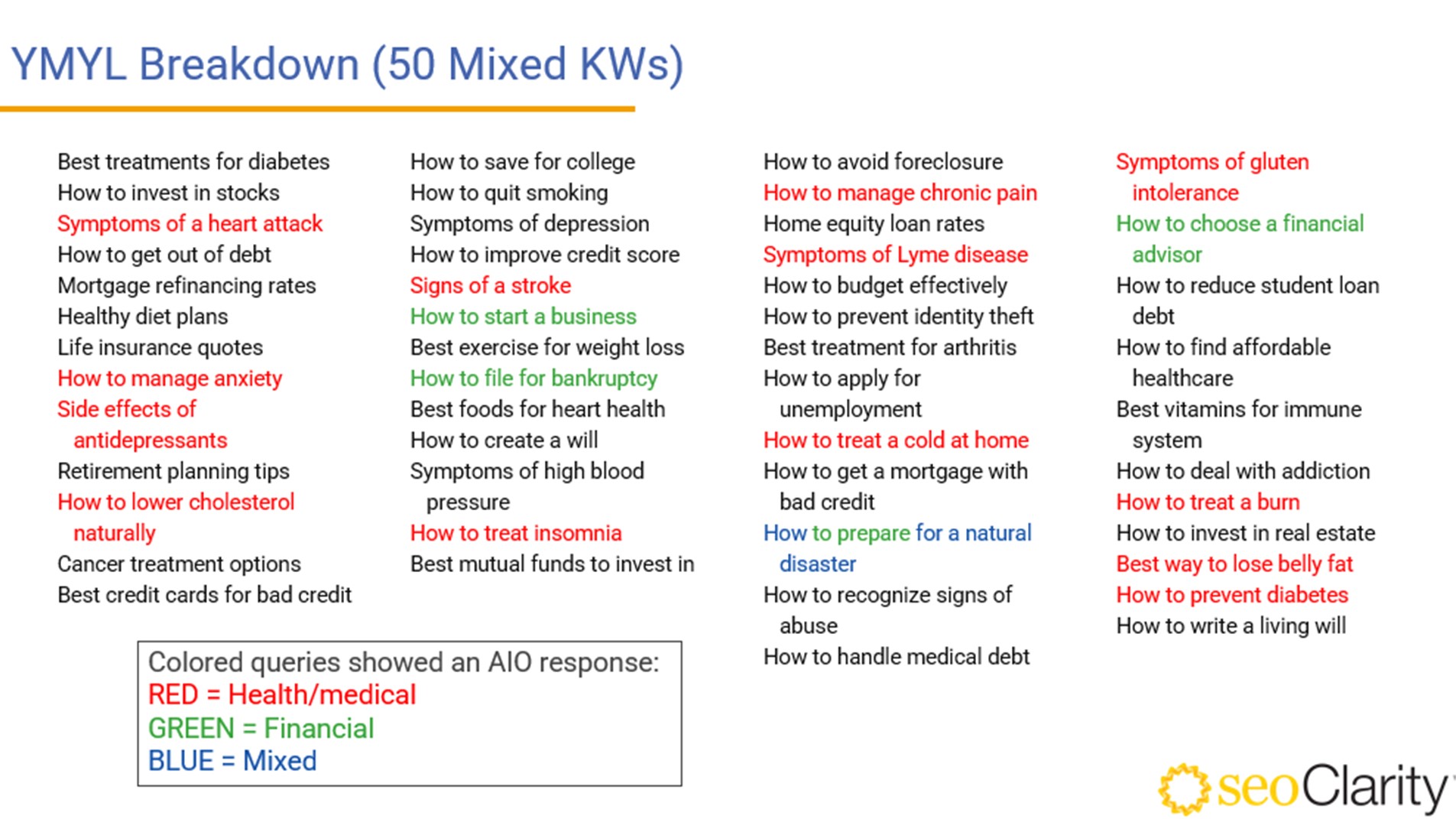

X user Mark Traphagen (@marktraphagen) ran 50 queries related to finance, health, or both to see how many would generate an AI Overview response, and shared the results in a post.

An AI response was generated in 36% of the queries, primarily those in which health or medical advice was being sought. Among them were potentially serious questions such as “signs of a stroke” and “how to treat a burn.” These are searches that could be carried out urgently, with the quality of the response potentially having a life-changing impact, for better or worse.

What else is Google AI Overview getting wrong?

This worrying AI fail is being hideously exposed online. X alone is awash with screenshots of Google AI Overview giving bad medical advice or just blatantly wrong answers to questions. Here are some more examples:

1. In another highly dubious piece of health advice, as posted by user ghostface uncle (@dril), AI Overview advises that “you should aim to drink at least 2 quarts (2 liters) of urine every 24 hours.”

2. In one of the most dangerous examples, user Gary (@allgarbled) posts a screenshot of AI Overview responding to the search “I’m feeling depressed” with an answer that included the suggestion of committing suicide. At least, in this instance, the AI pointed out that it was the suggestion of a Reddit user, but it’s probably best not to mention it at all.

3. The bad health advice isn’t limited to humans. @napalmtrees captures AI Overview suggesting that it’s always safe to leave a dog in a hot car. It backs up this information by stating that the Beatles released a song with that title. This is both not the clever point that the model thinks it is and a concerning indication of what it classifies as a source.

4. In better news for man’s best friend, AI Overview appears to believe that a dog has played in the NBA. X user Patrick Cosmos (@veryimportant) spotted this one.

5. Joe Maring (@JoeMaring1) on X highlights AI Overview’s sinister summary of how Sandy Cheeks from SpongeBob supposedly died. I don’t remember that episode.

What should happen now?

Whether it was foreseeable or not, widely shared examples like these should be enough for Google to consider removing the AI Overview feature until it has been more rigorously tested. Not only is it offering terrible and potentially dangerous advice, but the exposure of these flaws risks damaging the brand at a time when being known to be at the forefront of AI is seemingly a top priority in the tech world.

It’s understandable how the raw response is collated, as we alluded to above. Google’s eagerness to roll this out quickly is also unsurprising, with other LLMs like ChatGPT offering answers in one click fewer than the traditional search engine model. But what’s baffling about AI Overview is that it doesn’t seem to be conducting its own analysis of the raw response it curates. In other words, you’d hope AI could recognize that there’s a top answer advising people to eat rocks, and then analyze the advice and veto it on the basis that rocks aren’t food. This is just my lay understanding of the tech, but it’s incumbent on the experts on Google’s payroll to work out a solution.

While we await Google’s next move, you might decide it’s best to follow the example of Android Authority’s Andrew Grush by turning off AI Overview.