Google Imagen is the latest AI text-to-image generator to be announced. It has not been released to the public. However, while announcing the new AI model, the company has shared the research paper, a benchmarking tool called DrawBench to draw objective comparisons with Imagen's competitors, and some wacky images for your subjective pleasure. It also sheds light on the potential harms of this tech.

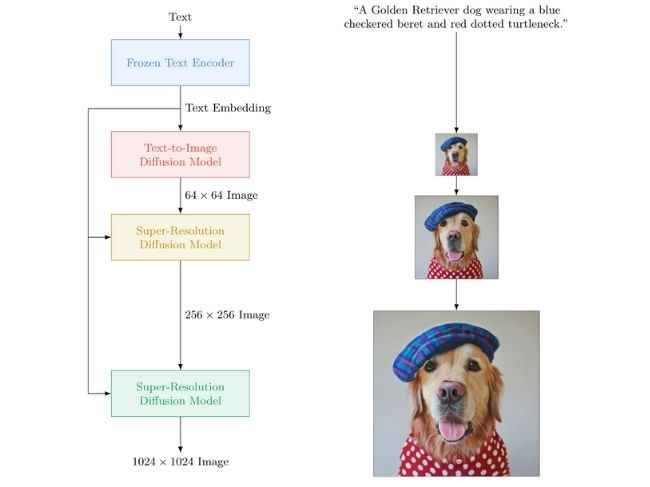

Google Imagen: Here's how a text-to-image model works

The idea is that you describe what you want the AI image generator to conjure up, and it does exactly that.

The images Google has shown off are most likely the best of the lot, and since the actual AI tool isn't accessible to the general public, we suggest you take the results and claims with a grain of salt.

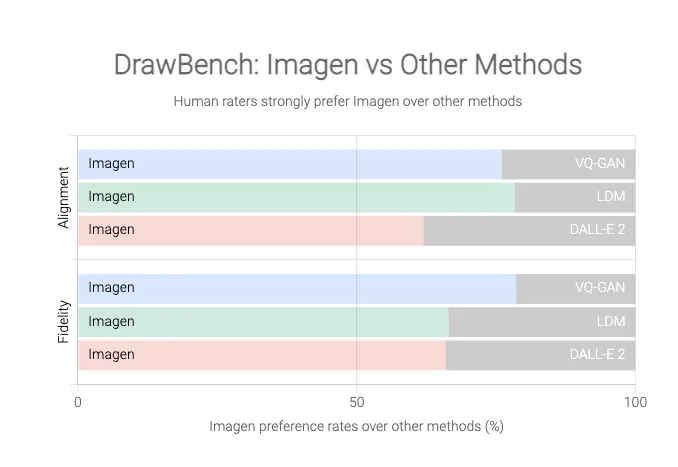

Regardless, Google is proud of Imagen's performance, which is perhaps why it has released a benchmark for AI text-to-image models called DrawBench. For what it's worth, Google's graphs show how much of a lead Imagen has over alternatives like OpenAI's Dall-E 2.

Now, just like OpenAI's solution, or for that matter any similar application, Imagen has intrinsic flaws: it is prone to producing disconcerting results.

Just like 'confirmation bias' in humans, which is our tendency to see what we believe and believe what we see, AI models that take in large amounts of data can also fall prey to these biases. This has been shown time and again to be a problem with text-to-image generators. So will Google's Imagen be any different?

In Google's own words, these AI models encode "several social biases and stereotypes, including an overall bias towards generating images of people with lighter skin tones and a tendency for images portraying different professions to align with Western gender stereotypes".

The Alphabet company could always filter out certain words or phrases and feed the model carefully curated datasets. But at the scale of data these machines work with, not everything can be sifted through, and not all kinks can be ironed out. Google admits as much, noting that "[T]he large scale data requirements of text-to-image models […] have led researchers to rely heavily on large, mostly uncurated, web-scraped datasets […] Dataset audits have revealed these datasets tend to reflect social stereotypes, oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity groups."

So, as Google says, Imagen "is not suitable for public use at this time". If and when it is available, let's try saying to it, "Hey Google, Imagen there's no heaven. It's easy if you try. No hell below us. Above us, only sky".

For other news, reviews, feature stories, buying guides, and everything else tech-related, keep reading Digit.in.