In brief: As with many revolutionary new technologies, the rise of generative AI has brought some unwelcome developments with it. One of these is the creation of YouTube videos featuring AI-generated personas that are used to spread information-stealing malware.

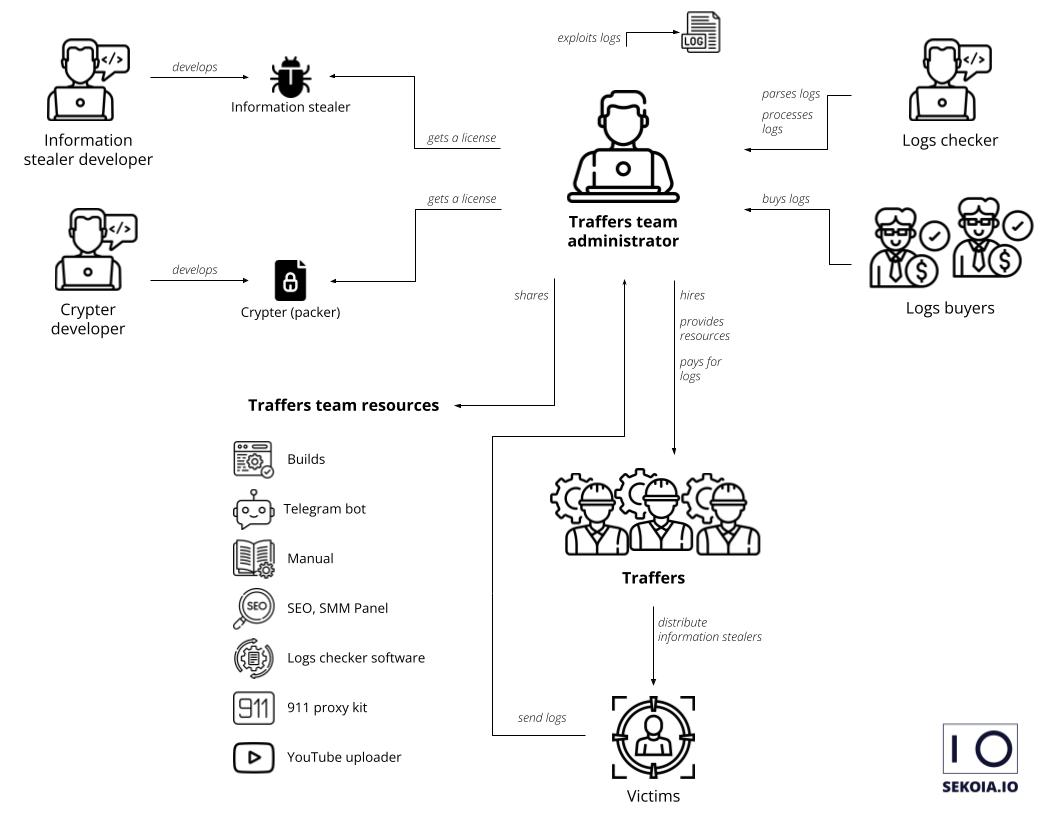

CloudSEK, a contextual AI company that predicts cyberthreats, writes that since November 2022 there has been a 200-300% month-on-month increase in YouTube videos containing links to stealer malware, including Vidar, RedLine, and Raccoon.

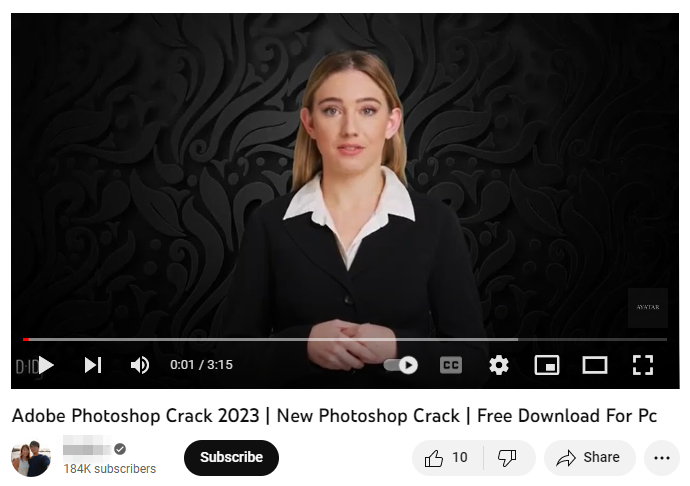

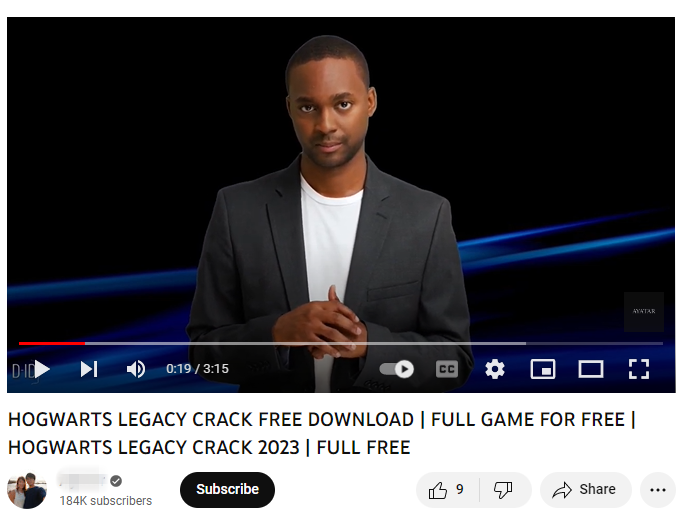

The videos try to lure people in by promising full tutorials on how to download cracked versions of games and of paid, licensed software such as Photoshop, Premiere Pro, Autodesk 3ds Max, and AutoCAD.

These kinds of videos usually consist of little more than screen recordings or audio walkthroughs, but they’ve recently become more sophisticated through the use of AI-generated clips from platforms such as Synthesia and D-ID, which makes them look less like scams to some viewers.

CloudSEK notes that more legitimate companies are using AI for recruitment material, educational training, promotional content, and so on, and cybercriminals are following suit with their own videos featuring AI-generated personas with “familiar and trustworthy” features.

Those who are tricked into believing the videos are the real deal and click on the malicious links usually end up downloading infostealers. Once installed, these can pilfer everything from passwords, credit card information, and bank account numbers to browser data, crypto wallet details, and system information, including IP addresses. The collected data is then uploaded to the threat actor’s server.

This isn’t the first time we’ve heard of YouTube being used to deliver malware. A year ago, security researchers discovered that some Valorant players were being tricked into downloading and running software promoted on YouTube as a game hack, when in fact it was RedLine, one of the same infostealers now being pushed in the generative-AI videos.

Game cheats were also used as a lure in another malware campaign spread on YouTube in September. Again, RedLine was the payload of choice.

Not only does YouTube boast 2.5 billion monthly active users, it’s also the most popular platform among teens, making it an alluring target for cybercriminals, who have been finding ways around the platform’s algorithm and review process. One of their methods is to use data leaks, phishing techniques, and stealer logs to take over existing YouTube accounts, usually popular ones with more than 100,000 subscribers.

Other tricks the hackers use to avoid detection include location-specific tags, fake comments that make a video seem legitimate, and an exhaustive list of tags that can fool YouTube’s algorithm into recommending the video and placing it among the top search results. They also obfuscate the malicious links in the video descriptions by shortening them, pointing them to file hosting platforms, or having them directly download the malicious zip file.