“Toxicity” is one of a few words that have come to define today’s internet culture, and for online game makers, it is arguably an even bigger issue than curbing cheating. Platoons of trust and safety officers have been raised, and over 200 gaming companies, including Blizzard, Riot, Epic Games, Discord, and Twitch, have joined the “Fair Play Alliance,” where they share notes on their struggle to get players to be nice to each other.

Yet for all their efforts, a 2023 report commissioned by Unity concluded that “toxic behavior is on the rise” in online games. Now some companies are turning to the buzziest new tech around for a solution: machine learning and generative AI.

Back at the 2024 Game Developers Conference in March, I spoke to two software makers who are coming at the problem from different angles.

Ron Kerbs is head of Kidas, which makes ProtectMe, parental monitoring software that hooks into PC games and feeds voice chat, text chat, and even in-game events into machine learning algorithms designed to distinguish bullying and hate speech from playful banter, and to identify dangerous behavior like the sharing of personal information. If it detects a problem, it alerts parents that they should talk to their kid.

You don’t have to be an eight-year-old Roblox player with concerned parents to find yourself being watched by robots, though. Kidas also makes a ProtectMe Discord bot which hops into voice channels and alerts human mods if it detects foul play. And at GDC, I also spoke to Maria Laura Scuri, VP of Labs and Community Integrity at ESL Faceit Group, which for a few years now has employed an “AI moderator” called Minerva to enforce the rules on the 30-million-user Faceit esports platform.

“Our idea was, well, if we look at sports, you usually have a referee in a match,” said Scuri. “And that’s the guy that’s kind of moderating and ensuring that rules are respected. Obviously, it was impossible to think about having a human moderating every match, because we had hundreds of thousands of concurrent matches happening at the same time. So that’s when we created Minerva, and we started, ‘OK, how can we use AI to moderate and deal with toxicity on the platform?’”

Faceit still employs “quite a bit” of human moderators, Scuri says, and Minerva is meant to complement, not replace, human judgment. It is authorized to take action on its own, however: Anything Minerva gets right 99% of the time is something they’re willing to let it enforce by itself, and for the 1% chance of a false positive, there’s a human-reviewed appeal process. For things Minerva is more likely to get wrong, its observations are sent to a human for review first, and then the decision is fed back into the AI model to improve it.
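The split Scuri describes can be pictured as a simple routing rule. The sketch below is only an illustration of that idea: the `Detection` type, the category labels, and the way reliability is measured are all assumptions, since Faceit has not published how Minerva makes this decision.

```python
from dataclasses import dataclass

# Assumed threshold: the article only says Minerva acts alone on things it
# "gets right 99% of the time". How that figure is measured is not stated.
AUTO_ENFORCE_PRECISION = 0.99


@dataclass
class Detection:
    match_id: str
    category: str               # made-up labels, e.g. "chat_abuse"
    category_precision: float   # assumed: share of past flags in this category that humans upheld


def route(detection: Detection) -> str:
    """Return 'auto_enforce' or 'human_review' for a flagged behavior."""
    if detection.category_precision >= AUTO_ENFORCE_PRECISION:
        # Enforced right away; a wrong call can still be appealed to a human.
        return "auto_enforce"
    # Less reliable categories go to a moderator first, and the moderator's
    # decision would then be fed back as a new training label.
    return "human_review"


print(route(Detection("m1", "chat_abuse", 0.995)))        # auto_enforce
print(route(Detection("m2", "movement_blocking", 0.90)))  # human_review
```

The point of a rule like this is that the appeal process only has to absorb the rare false positives from the reliable categories, while everything else gets a human look before any penalty.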

Detecting bad intentions

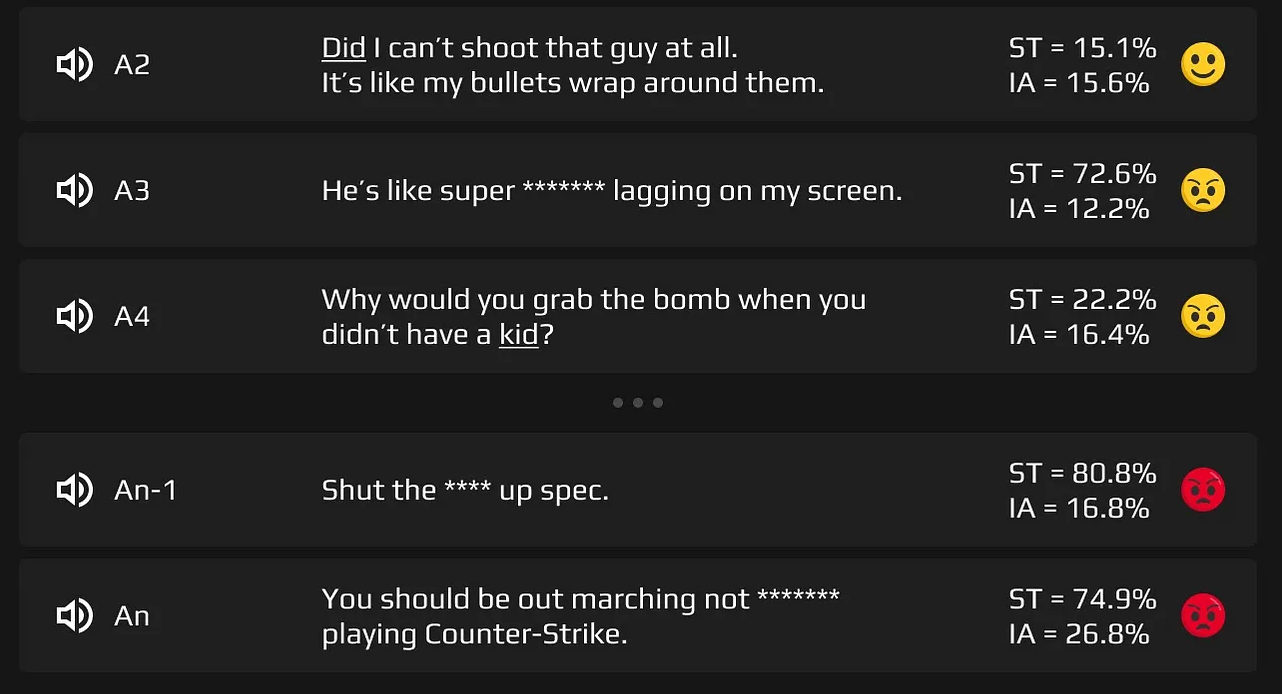

One thing that distinguishes ProtectMe and Minerva from the simple banned words lists of yesteryear is their ability to account for context.

“How are people reacting to these messages and communications? Because maybe it’s just banter,” said Scuri. “Maybe it’s just like, cursing, as well. Would you really want to flag somebody just because they curse once? Probably not. I mean, we’re all adults. So we just really try to understand the context and then understand how the other people reacted to it. Like, does everybody fall silent? Or did somebody else have a bad reaction? And then if that happens, that’s when [Minerva] would moderate it.”

ProtectMe is also concerned with more than just what’s being said in chat: It aims to identify variables like a speaker’s age, emotional state, and whether they’re a new acquaintance or someone the kid has previously talked to. “From the context of the conversation we can very accurately understand if it’s bullying, harassment, or just something that is part of the game,” says Kerbs.

Gamers are known for their aptitude for breaking or bypassing any system you put in front of them, and “they’re very creative” about getting around Minerva, says Scuri, but robo-mods aren’t limited to monitoring chat, either. Faceit trained Minerva to detect behaviors like intentionally blocking another player’s movement, for instance, to catch players who express their discontent with non-verbal griefing.

The latest frontier for Minerva is detecting ban evasion and smurfing. Scuri says that “actually really toxic” players (the ones who are incorrigibly disruptive and can’t be reformed) are only about 3-5% of Faceit’s population, but that small percentage of jackasses has an outsized effect on the whole playerbase, especially since banning them doesn’t always prevent them from coming back under a new name.

Minerva is on the case: “We have a number of data points that we can use to understand how likely it is that two accounts belong to the same person,” said Scuri. If a user appears to have two accounts, they’ll be forced to verify one of them and discontinue use of the other.
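Scuri did not say which data points Faceit compares, so the following is only a rough sketch of the general idea of scoring how much two accounts overlap. Every signal name and weight here is hypothetical and chosen purely for illustration.

```python
# Hypothetical signals and integer weights; the article does not say which
# data points Faceit actually uses or how they are combined.
SIGNAL_WEIGHTS = {"hardware_id": 5, "payment_hash": 3, "ip_prefix": 2}


def link_score(account_a: dict, account_b: dict) -> float:
    """Return a 0..1 score: the weighted share of signals that match."""
    matched = 0
    for signal, weight in SIGNAL_WEIGHTS.items():
        value_a = account_a.get(signal)
        value_b = account_b.get(signal)
        # A missing value is treated as "unknown", never as a match.
        if value_a is not None and value_a == value_b:
            matched += weight
    return matched / sum(SIGNAL_WEIGHTS.values())


banned = {"hardware_id": "hw-1", "payment_hash": "p-9", "ip_prefix": "203.0.113"}
fresh = {"hardware_id": "hw-1", "payment_hash": None, "ip_prefix": "198.51.100"}
print(link_score(banned, fresh))  # 0.5
```

A real system would presumably weigh far more evidence than this and would need a cautious cutoff, since a false match means asking an innocent player to verify an account.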

Eye in the sky

So, is all this AI moderation working? Yes, according to Scuri. “Back in 2018, 30% of our churn was driven by toxicity,” she said, meaning players reported it as the reason they left the platform. “Now that we’re in 2024, we reduced that to 20%. So we had 10 percentage points decrease, which was huge on our platform.”

Meanwhile, Kidas currently monitors 2 million conversations per month, according to Kerbs, and “about 10 to 15%” of customers get an alert within the first month. It’s not always a super alarming incident that sets the system off, but Kerbs says that 45% of alerts relate to private information, such as a parent’s social security or credit card number, being shared.

“Kids who are young can’t understand what’s private information,” said Kerbs, citing learnings from the Children’s Hospital of Philadelphia, a source of research for Kidas. “Their brain is not developed enough, at least in the young ages (six, seven, eight) to separate between private information and public information.”

I can’t imagine any parent wouldn’t want to know if their kid just shared their credit card number with a stranger, but I also worry about the effects of growing up in a parental panopticon, where the bulk of your social activity (in a 2022 Pew Research report, 46% of teens said they “almost constantly” use the internet) is being surveilled by AI sentinels.

Kerbs says he agrees that kids deserve privacy, which is why ProtectMe analyzes the content of messages to alert parents without sharing specific chat logs. His intent, he says, is to protect young kids and train them to know how to respond to bad actors (and that offers for free V-bucks are not, in fact, real) so that when they’re teenagers, their parents are comfortable uninstalling the software.

“I don’t want to create a spying machine or something like that,” he said. “I want to make parents and kids feel like they can talk about things.”

If they’re as effective as Kerbs and Scuri say, it may not be long before you encounter a robo-mod yourself (if you haven’t already), though approaches to the toxicity problem other than surveillance are also being explored. Back at GDC, Journey designer Jenova Chen argued that toxicity is manifested by the design of online spaces, and that there are better ways to build them. “As a designer, I’m just really pissed that people are so careless when it comes to maintaining the culture of a space,” he said.

For games like Counter-Strike 2 and League of Legends, though, I don’t foresee the demand for a moderating presence decreasing anytime soon. Scuri compared Minerva to a referee, and I think it’s safe to say that, thousands of years after their invention, physical sports do still require referees to moderate what you might call “toxicity.” One of the jobs of an NHL ref is literally to break up fistfights, and the idea of an AI ref isn’t even limited to esports: Major League Baseball has been controversially experimenting with robo-umps.