COMMENTARY

In 2023, more than 23,000 vulnerabilities were discovered and disclosed. While not all of them had associated exploits, it has become increasingly common for there to be a proverbial race to the bottom to see who can be the first to release an exploit for a newly announced vulnerability. This is a dangerous precedent to set, because it directly enables adversaries to mount attacks on organizations that may not have had the time or the staffing to patch the vulnerability. Instead of racing to be the first to publish an exploit, the security community should take a stance of coordinated disclosure for all new exploits.

Coordinated Disclosure vs. Full Disclosure

In simple terms, coordinated disclosure is when a security researcher coordinates with a vendor to alert them of a discovered vulnerability and gives them time to patch before making the research public. Full disclosure is when a security researcher releases their work to the wild without restriction as early as possible. Nondisclosure (which, as a policy, isn't relevant here) is the policy of not releasing vulnerability information publicly, or of sharing it only under a nondisclosure agreement (NDA).

There are arguments for both sides.

For coordinated vulnerability disclosure, while there is no specific endorsed framework, Google's vulnerability disclosure policy is a commonly accepted baseline, and the company openly encourages use of its policy verbatim. In summary, Google adheres to the following:

- Google will notify vendors of the vulnerability immediately.

- 90 days after notification, Google will publicly share the vulnerability.

The policy does allow for exceptions, which are listed on Google's website.
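As a rough illustration of the timeline summarized above, the following Python sketch computes when a vulnerability would become public under a flat 90-day window. The function names and the example dates are hypothetical, and the sketch deliberately ignores the exceptions the policy allows for.

```python
from datetime import date, timedelta

# Assumption: a flat 90-day window, as summarized in the text.
# The policy's exceptions are not modeled here.
DISCLOSURE_WINDOW_DAYS = 90


def public_disclosure_date(vendor_notified_on: date) -> date:
    """Return the date the vulnerability would be shared publicly."""
    return vendor_notified_on + timedelta(days=DISCLOSURE_WINDOW_DAYS)


def days_until_disclosure(vendor_notified_on: date, today: date) -> int:
    """Return how many days the vendor has left to patch (never negative)."""
    remaining = (public_disclosure_date(vendor_notified_on) - today).days
    return max(remaining, 0)


if __name__ == "__main__":
    notified = date(2024, 1, 1)  # hypothetical notification date
    print(public_disclosure_date(notified))
    print(days_until_disclosure(notified, date(2024, 3, 1)))
```

The point of the sketch is only that the clock starts at vendor notification, not at public announcement, which is the window that coordinated disclosure gives defenders to patch.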

On the full disclosure side, the justification for immediate disclosure is that if vulnerabilities are not disclosed, users have no recourse to request patches and a company has no incentive to release them, which restricts the ability of users to make informed decisions about their environments. Additionally, if vulnerabilities are not disclosed, malicious actors that are currently exploiting a vulnerability can continue to do so with no repercussions.

There are no enforced standards for vulnerability disclosure, and therefore timing and communication rely purely on the ethics of the security researcher.

What Does This Mean for Us, As Defenders, When Dealing With Published Exploits?

I think it's clear that vulnerability disclosure is not going away and is a good thing. After all, users have a right to know about vulnerabilities in the devices and software in their environments.

As defenders, we have an obligation to protect our customers, and if we want to ethically research and disclose exploits for new vulnerabilities, we must adhere to a policy of coordinated disclosure. Hype, clout-chasing, and personal brand reputation are necessary evils today, especially in a competitive job market. For independent security researchers, getting visibility for their research is paramount; it can lead to job offers, teaching opportunities, and more. Being the "first" to release something is a major accomplishment.

To be clear, reputation isn't the only reason security researchers release exploits. We're all passionate about our work and sometimes just like watching computers do the neat things we tell them to do. From a corporate standpoint, security companies have an ethical obligation to follow responsible disclosure. To do otherwise would be to enable attackers to attack the very customers we are trying to defend.

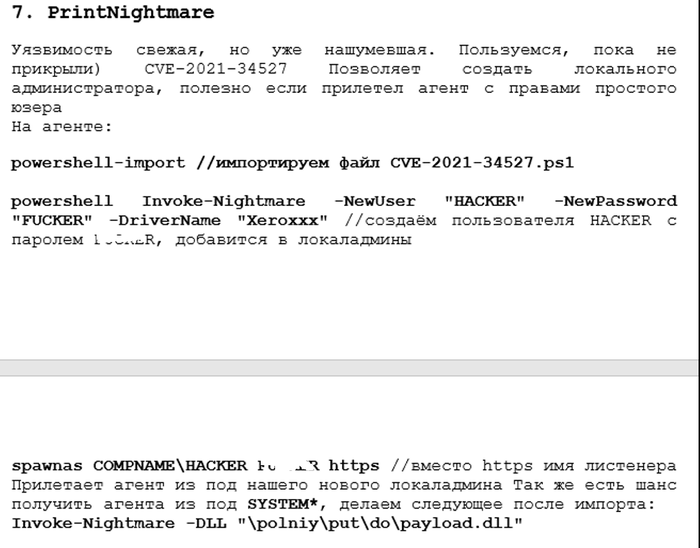

It is no secret that malicious actors monitor safety researchers, to the extent that menace actors have built-in researcher work into their toolkits (see Conti’s integration of PrintNightmare exploits):

This isn't to say that researchers shouldn't publish their work, but, ethically, they should follow the principle of responsible disclosure, both for vulnerabilities and for exploits.

We recently saw this around the ScreenConnect vulnerability: several security vendors raced to publish exploits, some within two days of the public announcement of the vulnerability, as with a Horizon3 blog post. Two days is not nearly enough time for customers to patch critical vulnerabilities, and there is a difference between awareness posts and full deep dives on vulnerabilities and exploitation. A race to be the first to release an exploit doesn't accomplish anything positive. There is certainly an argument that threat actors will engineer their own exploit, which is true, but let them take the time to do so. The security community does not need to make the attacker's job easier.

Exploits are meant to be researched in order to provide an understanding of all the potential angles from which the vulnerability in question could be exploited in the wild.

Exploit research, however, should be performed and controlled internally, and not publicly disclosed at a level of detail that benefits the threat actors looking to leverage the vulnerability. Exploit research that well-known researchers and research firms advertise publicly (on Twitter, GitHub, etc.) is frequently monitored by these same nefarious actors.

While the research is necessary, the speed and detail of disclosure of the exploit portion can do greater harm and defeat the efficacy of threat intelligence for defenders, especially considering the reality of patch management across organizations. Unfortunately, in this day and age and in the current threat landscape, exploit research that is made public, even with a patch available, does more harm than good.