Anthropic has launched Claude 3.5 Sonnet, the latest addition to its AI model lineup, claiming it outperforms both its own earlier models and rivals such as OpenAI's GPT-4o ("Omni"). The model is available for free on Claude.ai and in the Claude iOS app, and is also accessible through the Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI. Claude 3.5 Sonnet is priced at $3 per million input tokens and $15 per million output tokens, and it has a 200,000-token context window.
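To make the per-token pricing concrete, here is a minimal sketch that estimates the cost of a single request from the prices quoted above. The function name `estimate_cost` is hypothetical. The sketch also makes two simplifying assumptions: prices are billed linearly per token, and input plus output tokens must together fit within the 200,000-token context window. It does not account for any other billing rules Anthropic may apply.

```python
# Prices quoted in the announcement, in US dollars per million tokens.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00
# Context window size quoted in the announcement.
CONTEXT_WINDOW = 200_000


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of one request.

    Assumes linear per-token billing and (as a simplification) that
    input and output tokens together must fit in the context window.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens + output_tokens > CONTEXT_WINDOW:
        raise ValueError("request exceeds the 200,000-token context window")
    return (
        input_tokens * INPUT_PRICE_PER_MTOK
        + output_tokens * OUTPUT_PRICE_PER_MTOK
    ) / 1_000_000


if __name__ == "__main__":
    # A long document (100,000 tokens) summarized into 2,000 tokens.
    print(f"${estimate_cost(100_000, 2_000):.2f}")  # prints "$0.33"
```

Under these assumptions, the input side dominates for long-document tasks ($0.30 of the $0.33 above), while output tokens cost five times more per token, so generation-heavy workloads scale faster with output length.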

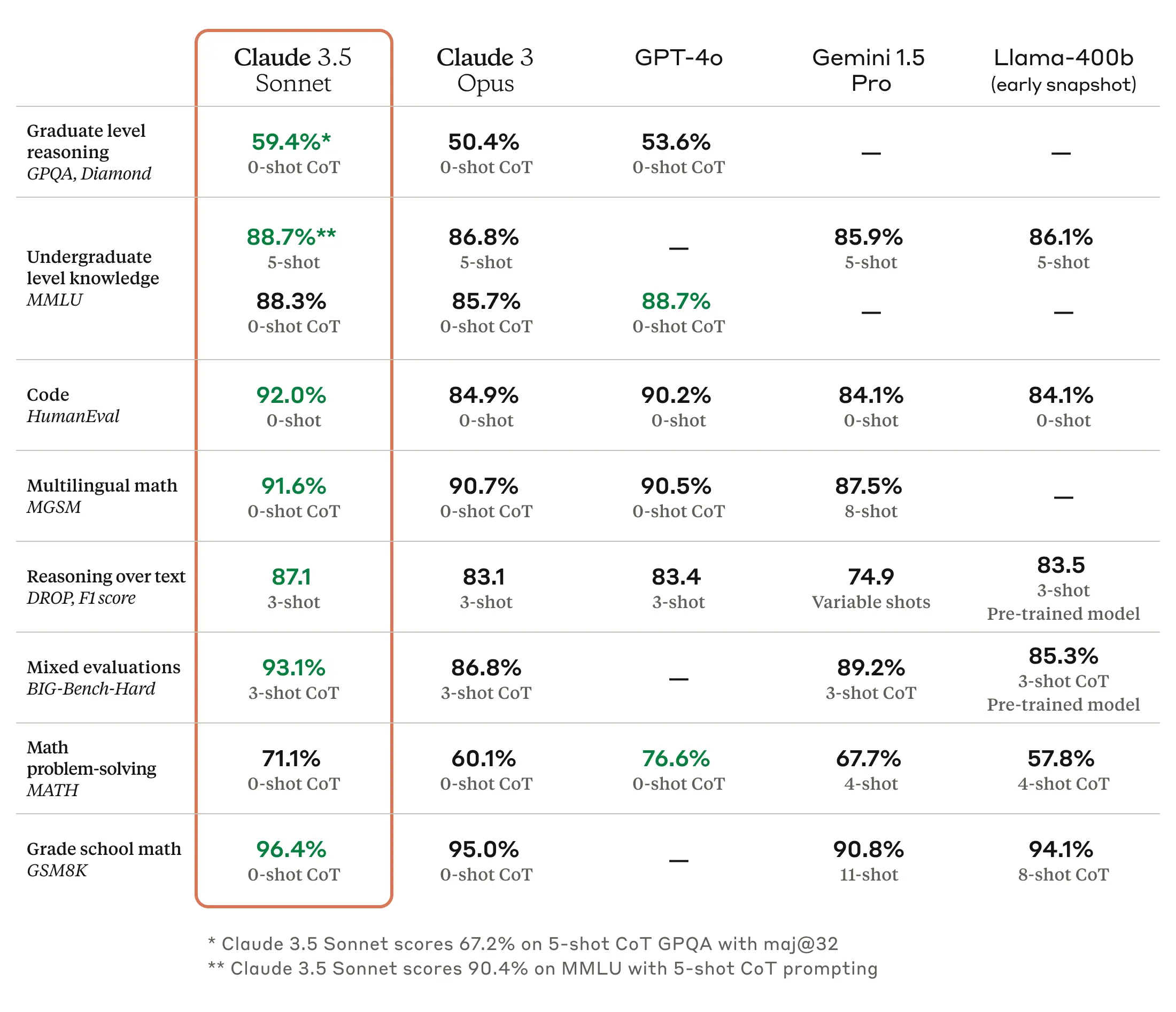

Claude 3.5 Sonnet sets new high scores on benchmarks for graduate-level reasoning (GPQA), undergraduate-level knowledge (MMLU), and coding proficiency (HumanEval). It shows marked improvements in grasping nuance, humor, and complex instructions, and it excels at writing high-quality content in a natural tone. The model runs at twice the speed of Claude 3 Opus, which makes it well suited to complex tasks such as context-sensitive customer support and multi-step workflows.

"In an internal agentic coding evaluation, Claude 3.5 Sonnet solved 64% of problems, outperforming Claude 3 Opus, which solved 38%."

When provided with the relevant tools, the model can independently write, edit, and execute code, which makes it effective for updating legacy applications and migrating codebases. It also excels at visual reasoning tasks, such as interpreting charts and graphs, and can accurately transcribe text from imperfect images, a capability that benefits sectors such as retail, logistics, and financial services.

Anthropic has also introduced Artifacts, a new feature on Claude.ai that lets users generate and edit content such as code snippets, text documents, or website designs in real time. The feature marks Claude's evolution from a conversational AI into a collaborative work environment, and Anthropic plans to add support for team collaboration and centralized knowledge management in the future.

Anthropic emphasizes its commitment to safety and privacy, stating that Claude 3.5 Sonnet has undergone rigorous testing to reduce misuse. The model was evaluated by external experts, including the UK's Artificial Intelligence Safety Institute (UK AISI), and Anthropic incorporated feedback from child safety experts to update its classifiers and fine-tune its models. Anthropic states that it does not train its generative models on user-submitted data without explicit permission.

Looking ahead, Anthropic plans to release Claude 3.5 Haiku and Claude 3.5 Opus later this year, along with new features such as Memory, which will let Claude remember user preferences and interaction history.