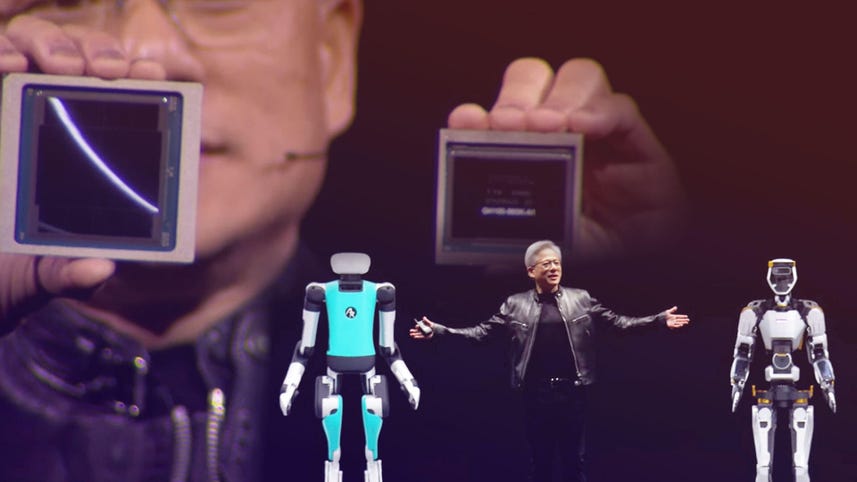

Speaker 1: I hope you realize this is not a concert. You have arrived at a developers conference. There will be a lot of science described: algorithms, computer architecture, mathematics. Blackwell is not a chip. Blackwell is the name of a platform. People think we make GPUs and we do, [00:00:30] but GPUs don't look the way they used to. This is Hopper. Hopper changed the world. This is Blackwell. It's okay, Hopper.

Speaker 1: 208 billion transistors. And so you could see, I can see that there's [00:01:00] a small line between two dies. This is the first time two dies have abutted like this together in such a way that the two dies think it's one chip. There's 10 terabytes of data between it, 10 terabytes per second, so that these two sides of the Blackwell chip have no clue which side they're on. There's no memory locality issues, no cache issues. It's just one giant chip and it goes into two types of systems. [00:01:30] The first one, it's form, fit, function compatible to Hopper, and so you slide out Hopper and you push in Blackwell. That's the reason why one of the challenges of ramping is going to be so efficient. There are installations of Hoppers all over the world and they could be the same infrastructure, same design, the power, the electricity, the thermals, the software identical, push it right back. And so this is a Hopper version [00:02:00] for the current HGX configuration and this is what the second Hopper looks like. Now this is a prototype board. This is a fully functioning board and I'll just be careful here. This right here is, I don't know, $10 billion.

Speaker 1: The second’s 5.

Speaker 1: It gets cheaper after that. So any customers in [00:02:30] the audience? It's okay. The Grace CPU has a super fast chip-to-chip link. What's amazing is this computer is the first of its kind where this much computation, first of all, fits into this small of a place. Second, it's memory coherent. They feel like they're just one big happy family working on one application together. We created a processor for the generative AI era and one of the most important [00:03:00] parts of it is content token generation. We call this format FP4. The rate at which we're advancing computing is insane and it's still not fast enough, so we built another chip. This chip is just an incredible chip. We call it the NVLink Switch. It's 50 billion transistors. It's almost the size of Hopper all by itself. This switch chip has four NVLinks in it, each 1.8 [00:03:30] terabytes per second, and it has computation in it, as I mentioned. What is this chip for? If we were to build such a chip, we can have every single GPU talk to every other GPU at full speed at the same time. You can build a system that looks like this.
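
Editor's note: the bandwidth figures quoted above can be checked with a little arithmetic. The sketch below is only a back-of-the-envelope illustration. It assumes the four links' bandwidths simply add up, which the talk does not state, and the function and constant names are made up for this example.

```python
# Back-of-the-envelope check of the NVLink Switch figures quoted in the talk.
# Assumption (not from the talk): the four links' bandwidths simply add;
# real throughput depends on topology and traffic the talk does not describe.

NVLINKS_PER_SWITCH = 4       # "four NVLinks" (from the talk)
TB_PER_S_PER_LINK = 1.8      # "each 1.8 terabytes per second" (from the talk)
DIE_TO_DIE_TB_PER_S = 10.0   # link between the two Blackwell dies (from the talk)


def aggregate_bandwidth(links: int, tb_per_s_per_link: float) -> float:
    """Return the summed bandwidth of `links` identical links, in TB/s."""
    return links * tb_per_s_per_link


switch_total = aggregate_bandwidth(NVLINKS_PER_SWITCH, TB_PER_S_PER_LINK)
die_vs_link = DIE_TO_DIE_TB_PER_S / TB_PER_S_PER_LINK

print(f"Switch aggregate: {switch_total:.1f} TB/s")
print(f"Die-to-die link vs. one NVLink: {die_vs_link:.1f}x")
```

Under that additive assumption, one switch chip carries about 7.2 TB/s in total, and the die-to-die link inside Blackwell is several times faster than any single NVLink.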

Speaker 1: [00:04:00] Now, this system, this system is kind of insane. This is one DGX. This is what a DGX looks like. Now, just so you know, there are only a couple, two, three exaflops machines on the planet as we speak, and so this is an exaflops AI system in one single rack. I want to thank some partners that are joining us in this. AWS [00:04:30] is gearing up for Blackwell. They're going to build the first GPU with secure AI. They're building out a 222 exaflops system. We're CUDA accelerating SageMaker AI, we're CUDA accelerating Bedrock AI. Amazon Robotics is working with us using Nvidia Omniverse and Isaac Sim. AWS Health has Nvidia Health integrated into it. So AWS has really leaned into accelerated computing. Google is [00:05:00] gearing up for Blackwell. GCP already has A100s, H100s, T4s, L4s, a whole fleet of Nvidia CUDA GPUs, and they recently announced a Gemma model that runs across all of it.

Speaker 1: We're working to optimize and accelerate every aspect of GCP. We're accelerating Dataproc, their data processing engine, JAX, XLA, Vertex AI and MuJoCo for robotics. So we're working with Google and GCP across a whole bunch [00:05:30] of initiatives. Oracle is gearing up for Blackwell. Oracle is a great partner of ours for Nvidia DGX Cloud, and we're also working together to accelerate something that's really important to a lot of companies: Oracle Database. Microsoft is accelerating and Microsoft is gearing up for Blackwell. Microsoft and Nvidia have a wide-ranging partnership. We're accelerating, CUDA accelerating, all kinds of services. When you chat, obviously, and AI services that are in Microsoft Azure, it's very, very likely [00:06:00] NVIDIA's in the back doing the inference and the token generation. They built the largest Nvidia InfiniBand supercomputer, basically a digital twin of ours or a physical twin of ours. We're bringing the Nvidia ecosystem to Azure, Nvidia DGX Cloud to Azure.

Speaker 1: Nvidia Omniverse is now hosted in Azure. Nvidia Healthcare is in Azure and all of it is deeply integrated and deeply connected with Microsoft Fabric. A NIM, it's a pre-trained model, so it's pretty clever, [00:06:30] and it is packaged and optimized to run across NVIDIA's install base, which is very, very large. What's inside it is incredible. You have all these pre-trained state-of-the-art open source models. They could be open source, they could be from one of our partners. It could be created by us, like Nvidia Moment. It is packaged up with all of its dependencies. So CUDA, the right version, cuDNN, the right version, TensorRT-LLM, distributing across the multiple GPUs, Triton [00:07:00] Inference Server, all completely packaged together. It's optimized depending on whether you have a single GPU, multi-GPU or multi-node of GPUs. It's optimized for that and it's connected up with APIs that are simple to use.

Speaker 1: These packages, incredible bodies of software, will be optimized and packaged and we'll put it on a website and you can download it, you can take it with you, you can run it in any cloud, you can run it in your own data center. You can [00:07:30] run it in workstations if it fits. And all you have to do is come to ai.nvidia.com. We call it Nvidia Inference Microservice, but inside the company, we all call it NIMs. We have a service called NeMo microservice that helps you curate the data, preparing the data so that you could teach this, onboard this AI. You fine-tune them and then you guardrail it. You can even evaluate the answer, evaluate its performance against other examples. And so we are effectively an AI [00:08:00] foundry. We will do for you and the industry on AI what TSMC does for us building chips. And so we go to it, go to TSMC with our big ideas.

Speaker 1: They manufacture it and we take it with us. And so exactly the same thing here. AI Foundry, and the three pillars are the NIMs, NeMo microservice and DGX Cloud. We're announcing that Nvidia AI Foundry is working with some of the world's great companies. SAP generates 87% of the world's global commerce. [00:08:30] Basically the world runs on SAP, we run on SAP. Nvidia and SAP are building SAP Joule copilots using Nvidia NeMo and DGX Cloud. ServiceNow: 80, 85% of the world's Fortune 500 companies run their people and customer service operations on ServiceNow, and they're using Nvidia AI Foundry to build ServiceNow Assist virtual assistants. Cohesity backs up the world's data. They're sitting on a gold mine [00:09:00] of data, hundreds of exabytes of data. Over 10,000 companies. Nvidia AI Foundry is working with them, helping them build their Gaia generative AI agent. Snowflake is a company that stores the world's digital warehouse in the cloud and serves over 3 billion queries a day for 10,000 enterprise customers.

Speaker 1: Snowflake is working with Nvidia AI Foundry to build copilots with Nvidia NeMo [00:09:30] and NIMs. NetApp: nearly half of the data in the world is stored on-prem on NetApp. Nvidia AI Foundry is helping them build chatbots and copilots like those vector databases and retrievers with Nvidia NeMo and NIMs, and we have a great partnership with Dell. Everybody who is building these chatbots and generative AI, when you're ready to run it, you're going to need an AI factory, [00:10:00] and nobody is better at building end-to-end systems of very large scale for the enterprise than Dell. And so anybody, any company, every company will need to build AI factories. And it turns out that Michael is here. He's happy to take your order. We need a simulation engine that represents the world digitally for the robot so that the robot has a gym to go learn how to be a robot. We call that [00:10:30] virtual world Omniverse, and the computer that runs Omniverse is called OVX, and OVX, the computer itself, is hosted in the Azure Cloud.

Speaker 2: The future of heavy industries starts as a digital twin. The AI agents helping robots, workers and infrastructure navigate unpredictable events in complex industrial spaces will be built and evaluated first in sophisticated digital twins.

Speaker 1: Once you connect everything together, it's insane [00:11:00] how much productivity you can get and it's just really, really wonderful. All of a sudden, everybody's operating on the same ground truth. You don't have to exchange data and convert data, make mistakes. Everybody is working on the same ground truth, from the design department to the art department, the architecture department, all the way to the engineering and even the marketing department. Today we're announcing that Omniverse Cloud streams to the Vision Pro, and [00:11:30] it is very, very strange that you walk around virtual doors when I was getting out of that car, and everybody does it. It is really, really quite amazing. Vision Pro connected to Omniverse portals you into Omniverse. And because all of these CAD tools and all these different design tools are now integrated and connected to Omniverse, you can [00:12:00] have this type of workflow. Really incredible.

Speaker 3: This is Nvidia Project GR00T, a general purpose foundation model for humanoid robot learning. The GR00T model takes multimodal instructions and past interactions as input and produces the next action for the robot to execute. We developed Isaac Lab, a robot learning application [00:12:30] to train GR00T on Omniverse Isaac Sim, and we scale out with OSMO, a new compute orchestration service that coordinates workflows across DGX systems for training and OVX systems for simulation. The GR00T model will enable a robot to learn from a handful of human demonstrations so it can help with everyday tasks and emulate human movement just by observing us. All this incredible intelligence [00:13:00] is powered by the new Jetson Thor robotics chips, designed for GR00T, built for the future. With Isaac Lab, OSMO and GR00T, we're providing the building blocks for the next generation of AI-powered robotics.

Speaker 1: About [00:13:30] the same size. The soul of Nvidia, the intersection of computer graphics, physics, artificial intelligence. It all came to bear at this moment. The name of that project: General Robotics 003. I know. Super good, super [00:14:00] good. Well, I think we have some special guests, do we? Hey guys, I understand you guys are powered by Jetson. They're powered by Jetsons, little Jetson robotics computers inside. [00:14:30] They learned to walk in Isaac Sim. Ladies and gentlemen, this is Orange and this is the famous Green. They are the BDX robots of Disney. Amazing Disney Research. Come on, you guys. Let's wrap up. Let's go. Five things. Where are you going? [00:15:00] I sit right here. Don't be afraid. Come here, Green. Hurry up. What are you saying? No, it's not time to eat. It's not time to eat. I'll give you a snack in a moment. Let me finish up real quick. Come on, Green. [00:15:30] Hurry up. Wasting time. This is what we announced to you today. This is Blackwell. This is the amazing, amazing processors, NVLink switches, networking systems, and the system design is a miracle. This is Blackwell, and this to me is what a GPU looks like in my mind.