AI is taking the world by storm, and while you can use Google Bard or ChatGPT, you can also use a locally-hosted one on your Mac. Here's how to use the new MLC LLM chat app.

Artificial Intelligence (AI) is the new cutting-edge frontier of computer science and is generating quite a bit of hype in the computing world.

Chatbots, AI-based apps that users can converse with as if they were domain experts, are growing in popularity, to say the least.

Chatbots have seemingly expert knowledge of a wide variety of general and specialized subjects and are being deployed everywhere at a rapid pace. One group, OpenAI, released ChatGPT a few months ago to a stunned world.

ChatGPT has seemingly limitless knowledge of almost any subject and can answer questions in real time that would otherwise take hours or days of research. Both companies and workers have realized AI can be used to speed up work by reducing research time.

The downside

Given all that, however, there's a downside to some AI apps. The primary drawback of AI is that its results must still be verified.

While usually mostly correct, AI can provide inaccurate or misleading data, which can lead to false conclusions or results.

Software developers and software companies have taken to "copilots": specialized chatbots that help developers write code by having AI automatically write the outline of functions or methods, which a developer can then verify.

While a great timesaver, copilots can also write incorrect code. Microsoft, Amazon, GitHub, and NVIDIA have all released copilots for developers.

Getting started with chatbots

To understand how chatbots work, at least at a high level, you must first understand some AI basics, specifically Machine Learning (ML) and Large Language Models (LLMs).

Machine Learning is a branch of computer science dedicated to researching and developing ways to teach computers to learn.

An LLM is essentially a natural language processing (NLP) program that uses huge sets of data and neural networks (NNs) to generate text. LLMs are built by training a model on large datasets, from which it "learns" over time, essentially becoming a domain expert in a particular field. How expert it becomes depends on the accuracy of the input data.

The more input data there is (and the more accurate it is), the more precise and correct a chatbot that uses the model will be. LLMs also rely on deep learning during training.

When you ask a chatbot a question, it uses its LLM to generate the most appropriate answer, based on what it learned during training about subjects related to your question.

In essence, chatbots have precomputed knowledge of a topic and, given an accurate enough LLM and sufficient training time, can provide correct answers far faster than most people can.

Using a chatbot is like having an automated team of PhDs at your disposal instantly.
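To make the "learn from data, then generate an answer" idea concrete, here is a toy sketch in Python. It is a word-pair (bigram) counter, not a neural network: it "trains" by counting which word follows which in some example text, then generates a reply one word at a time by picking the most frequent follower. Real LLMs such as LLaMA or LaMDA use deep neural networks with billions of parameters, so treat this only as an illustration of the principle that what a model produces depends on the data it was trained on.

```python
from collections import Counter, defaultdict


def train(text):
    """Toy "training": count which word follows each word in the input text."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def generate(model, start, max_words=8):
    """Toy "generation": repeatedly append the most frequent next word."""
    word = start.lower()
    output = [word]
    for _ in range(max_words - 1):
        followers = model.get(word)  # .get() does not create empty entries
        if not followers:
            break  # nothing was learned about this word, so stop
        word = followers.most_common(1)[0][0]
        output.append(word)
    return " ".join(output)


if __name__ == "__main__":
    corpus = "the train is fast the train is late the train is fast"
    model = train(corpus)
    print(generate(model, "the", max_words=4))
```

With that training text, `generate(model, "the", max_words=4)` returns "the train is fast", because "fast" followed "is" twice while "late" followed it only once. Change the training text and the answer changes with it.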

In February 2023, Meta AI released its own LLM called LLaMA. A month later, Google released its own AI chatbot, Bard, which is based on its own LLM, LaMDA. Other chatbots have since followed.

Generative AI

More recently, some generative models have learned to produce not just text but also graphics, music, and even entire books. Companies are interested in Generative AI to create things such as corporate graphics, logos, titles, and even digital movie scenes that replace actors.

For example, the thumbnail image for this article was generated by AI.

As a side effect of Generative AI, workers have become concerned about losing their jobs to automation driven by AI software.

Chatbot assistants

The world's first commercial user-available chatbot (BeBot) was released by Bespoke Japan for Tokyo Station City in 2019.

Released as an iOS and Android app, BeBot knows how to direct you to any point in the labyrinth-like station, help you store and retrieve your luggage, send you to an information desk, or find train times, ground transportation, or food and shops inside the station.

It can even tell you which platform to head to for the fastest train ride, by trip duration, to any destination in the city, all in a few seconds.

The MLC chat app

The Machine Learning Compilation (MLC) project is the brainchild of Apache Foundation deep learning researcher Siyuan Feng and Hongyi Jin, as well as others based in Seattle and in Shanghai, China.

The idea behind MLC is to deploy precompiled LLMs and chatbots to consumer devices and web browsers. MLC harnesses the power of consumer graphics processing units (GPUs) to accelerate AI results and searches, putting AI within reach of most modern consumer computing devices.

Another MLC project, Web LLM, brings the same functionality to web browsers and is in turn based on WebGPU. Only machines with specific GPUs are supported in Web LLM, since it relies on code frameworks that support those GPUs.

Most AI assistants rely on a client-server model, with servers doing most of the AI heavy lifting, but MLC bakes LLMs into native code that runs directly on the user's device, eliminating the need for LLM servers.

Setting up MLC

To run MLC on your device, it must meet the minimum requirements listed on the project and GitHub pages.

To run it on an iPhone, you'll need an iPhone 14 Pro Max, iPhone 14 Pro, or iPhone 12 Pro with at least 6GB of free RAM. You'll also need to install Apple's TestFlight app to install the app, but installation is limited to the first 9,000 users.

We tried running MLC on a base 2021 iPad with 64GB of storage, but it wouldn't initialize. Your results may vary on an iPad Pro.

You can also build MLC from source and run it on your phone directly by following the directions on the MLC-LLM GitHub page. You'll need the git source-code control system installed on your Mac to retrieve the sources.

To do so, make a new folder in Finder on your Mac, use the UNIX cd command to navigate to it in Terminal, then run the git clone command in Terminal as listed on the MLC-LLM GitHub page:

`git clone https://github.com/mlc-ai/mlc-llm.git` and press Return. git will download all the MLC sources into an mlc-llm subfolder inside the folder you created.

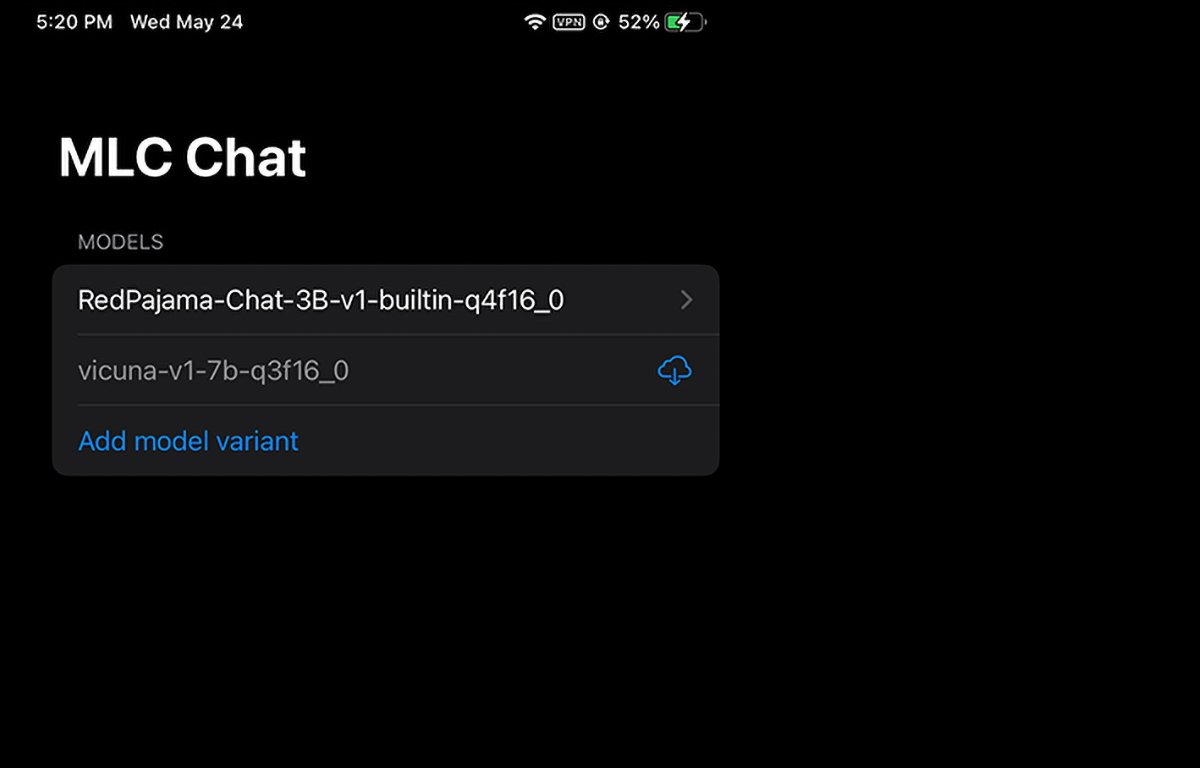

MLC running on iPhone. You can select or download which model weights to use.

Installing Mac prerequisites

On Mac and Linux computers, MLC is run from a command-line interface in Terminal. You'll need to install a few prerequisites first to use it:

- The Conda or Miniconda package manager

- Homebrew

- Vulkan graphics library (Linux or Windows only)

- git Large File Storage (LFS)
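Before following the MLC instructions, a quick way to see which of these command-line tools are already installed is a small Python check using the standard library's `shutil.which`. It assumes each tool is on your PATH under the command names shown (`conda`, `brew`, `git`, `git-lfs`); those names are our assumption about a typical setup rather than something the MLC page specifies, and Vulkan is left out because it isn't needed on a Mac.

```python
import shutil

# Assumed command name for each prerequisite (Vulkan omitted: Linux/Windows only).
PREREQUISITES = {
    "Conda or Miniconda": "conda",
    "Homebrew": "brew",
    "git": "git",
    "git LFS": "git-lfs",
}


def check_prerequisites(which=shutil.which):
    """Return {prerequisite: True/False} depending on whether its command is on PATH."""
    return {name: which(cmd) is not None for name, cmd in PREREQUISITES.items()}


if __name__ == "__main__":
    for name, found in check_prerequisites().items():
        print(f"{name:20} {'found' if found else 'MISSING'}")
```

The `which` parameter exists only so the lookup can be swapped out for testing; in normal use you just run the script from Terminal.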

For NVIDIA GPU users, the MLC instructions specifically state that you must manually install the Vulkan driver and make it the default driver. Another graphics library for NVIDIA GPUs, CUDA, won't work.

Mac users can install Miniconda using the Homebrew package manager, which we've covered previously. Note that Miniconda conflicts with another Homebrew Conda formula, miniforge.

So if you already have miniforge installed via Homebrew, you'll need to uninstall it first.

Following the directions on the MLC LLM page, the remaining installation steps are roughly:

- Create a new Conda environment

- Install git and git LFS

- Install the command-line chat app from Conda

- Create a new local folder, download LLM model weights, and set a LOCAL_ID variable

- Download the MLC libraries from GitHub

All of this is covered in detail on the instructions page, so we won't go into every aspect of setup here. It may seem daunting at first, but if you have basic macOS Terminal experience, it's really just a few straightforward steps.

The LOCAL_ID step simply sets that variable to point to one of the three model weights you downloaded.
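As an illustration of what that step accomplishes, this Python sketch checks that LOCAL_ID names one of the downloaded model folders. The folder layout (one subfolder per model inside a weights directory) and the `resolve_local_id` helper are hypothetical and not part of MLC; use the paths from the MLC instructions for a real setup.

```python
import os
import tempfile
from pathlib import Path


def resolve_local_id(weights_dir, local_id=None):
    """Hypothetical helper: return the folder LOCAL_ID names inside weights_dir."""
    # Fall back to the LOCAL_ID environment variable set during setup.
    local_id = local_id or os.environ.get("LOCAL_ID")
    if not local_id:
        raise ValueError("LOCAL_ID is not set")
    # Assumed layout: one subfolder per downloaded model weight.
    available = sorted(p.name for p in Path(weights_dir).iterdir() if p.is_dir())
    if local_id not in available:
        raise ValueError(f"{local_id!r} is not one of the downloaded models: {available}")
    return Path(weights_dir) / local_id


if __name__ == "__main__":
    # Demo with a throwaway folder standing in for the real weights directory.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("model-a", "model-b", "model-c"):
            (Path(tmp) / name).mkdir()
        print(resolve_local_id(tmp, "model-b"))
```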

The model weights are downloaded from the Hugging Face community website, which is something like a GitHub for AI.

Once everything is installed, you can run MLC in Terminal with the `mlc_chat_cli` command.

Using MLC in web browsers

MLC also has a web version, Web LLM.

The Web LLM variant only runs on Apple Silicon Macs. It won't run on Intel Macs and will produce an error in the chatbot window if you try.
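If you're not sure which kind of Mac you have, Python's standard `platform` module reports the operating system and CPU architecture. This sketch assumes an Apple Silicon Mac shows up as `Darwin` plus `arm64`. One caveat: an Intel build of Python running under Rosetta 2 reports `x86_64` even on an Apple Silicon Mac, so a `False` result isn't conclusive in that case.

```python
import platform


def is_apple_silicon(system=None, machine=None):
    """True on macOS (Darwin) running natively on an arm64 (Apple Silicon) CPU."""
    system = system or platform.system()
    machine = machine or platform.machine()
    # Caveat: x86_64 Python under Rosetta 2 reports "x86_64" even on Apple Silicon.
    return system == "Darwin" and machine == "arm64"


if __name__ == "__main__":
    print("Apple Silicon Mac:", is_apple_silicon())
```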

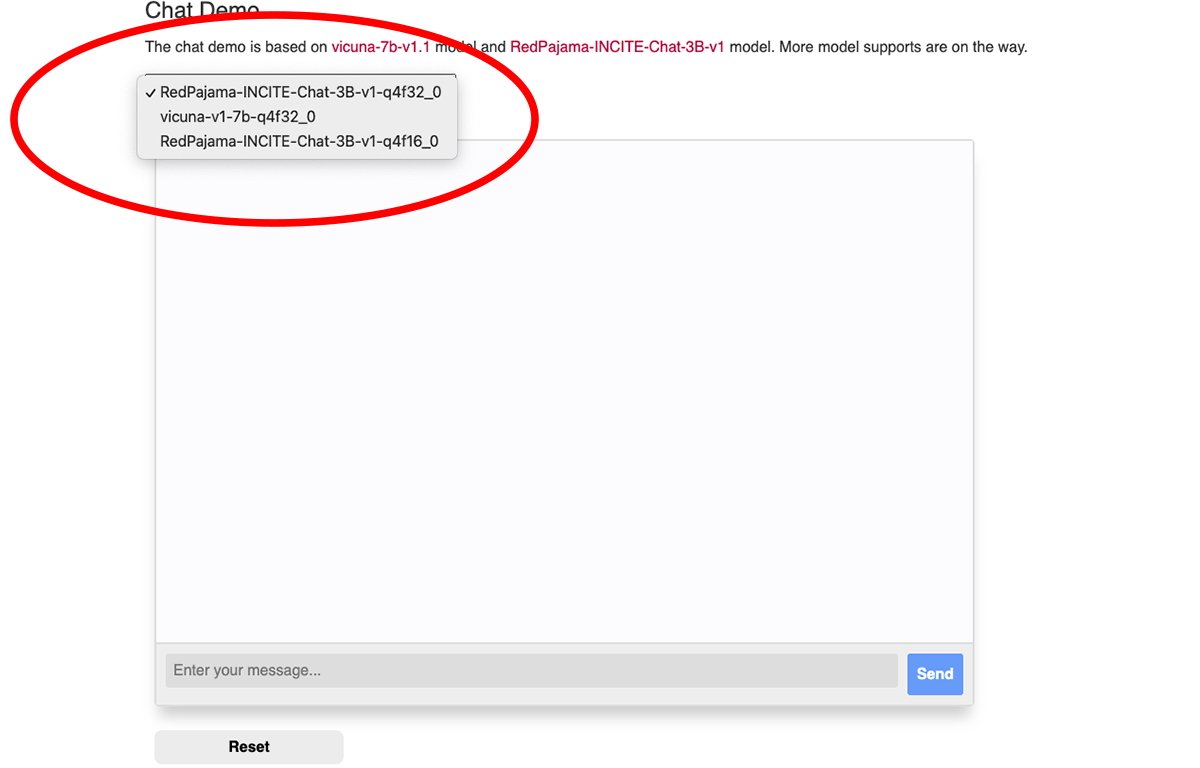

There's a popup menu at the top of the MLC web chat window from which you can select which downloaded model weights you want to use:

Select one of the model weights.

You'll need the Google Chrome browser (version 113 or later) to use Web LLM. Earlier versions won't work.

On a Mac, you can check your Chrome version number by choosing Chrome > About Google Chrome from the menu bar. If an update is available, click the Update button to update to the latest version.

You may need to restart Chrome after updating.
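The version check can also be scripted. This Python sketch reads the version string from Chrome's app bundle with the standard `plistlib` module and compares its major number against the 113 minimum. It assumes Chrome is installed in /Applications and that its Info.plist stores the version under the standard `CFBundleShortVersionString` key.

```python
import plistlib
from pathlib import Path

# Assumed default install location of Chrome's app bundle metadata.
CHROME_INFO_PLIST = Path("/Applications/Google Chrome.app/Contents/Info.plist")
MINIMUM_MAJOR = 113  # Web LLM needs Chrome 113 or later


def chrome_version(plist_path=CHROME_INFO_PLIST):
    """Return Chrome's version string, or None if the app bundle isn't there."""
    try:
        with open(plist_path, "rb") as f:
            return plistlib.load(f).get("CFBundleShortVersionString")
    except FileNotFoundError:
        return None


def supports_web_llm(version):
    """True if the major version ("113" in "113.0.5672.63") is at least 113."""
    if not version:
        return False
    return int(version.split(".")[0]) >= MINIMUM_MAJOR


if __name__ == "__main__":
    version = chrome_version()
    print("Chrome version:", version or "not found")
    print("New enough for Web LLM:", supports_web_llm(version))
```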

Note that the MLC Web LLM page recommends launching Chrome from the Mac Terminal using this command:

`"/Applications/Google Chrome.app/Contents/MacOS/Google Chrome" --enable-dawn-features=allow_unsafe_apis,disable_robustness`

allow_unsafe_apis and disable_robustness are features of Dawn, Chrome's WebGPU implementation, switched on through the --enable-dawn-features launch flag. They let Chrome use experimental features, which may be unstable.
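If you'd rather launch Chrome with those flags from a script, passing the arguments as a list to Python's `subprocess` module sidesteps the shell-quoting problem caused by the spaces in the app path. This is a minimal sketch assuming Chrome lives at the default /Applications location. Quit any running copy of Chrome first, since a new launch is likely to hand off to the existing instance without applying the flags.

```python
import os
import subprocess

# Assumed default location of the Chrome executable inside its app bundle.
CHROME_BINARY = "/Applications/Google Chrome.app/Contents/MacOS/Google Chrome"
DAWN_FEATURES = ("allow_unsafe_apis", "disable_robustness")


def chrome_command(binary=CHROME_BINARY, features=DAWN_FEATURES):
    """Build the argv list; a list needs no quoting for the spaces in the path."""
    return [binary, "--enable-dawn-features=" + ",".join(features)]


if __name__ == "__main__":
    cmd = chrome_command()
    if os.path.exists(cmd[0]):
        # Popen returns immediately, leaving Chrome running on its own.
        subprocess.Popen(cmd)
    else:
        print("Chrome not found at", cmd[0])
```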

Once everything is set up, just type a question into the "Enter your message" field at the bottom of the Web LLM page's chat pane and click the Send button.

The era of true AI and intelligent assistants is just beginning. While AI carries risks, this technology promises to enhance our future by saving vast amounts of time and eliminating a lot of work.