Since its launch, GitHub Copilot has already saved developers thousands of hours by providing AI-powered code suggestions. Copilot suggestions are definitely useful, but they were never meant to be complete, correct, working, or secure. For this article, I decided to take Copilot for a test flight to check the security of the AI’s suggestions.

First things first: what exactly is GitHub Copilot?

Copilot is an IDE plugin that suggests code snippets for various common programming tasks. It tries to understand comments and existing code to generate code suggestions. Copilot uses an AI-powered language model trained on thousands of pieces of publicly available code. As of this writing, Copilot is available by subscription to individual developers and supports Python, JavaScript, TypeScript, Ruby, and Go.

GitHub Copilot security concerns

Copilot is trained on code from publicly available sources, including code in public repositories on GitHub, so it builds suggestions that are similar to existing code. If the training set includes insecure code, then the suggestions may also introduce some typical vulnerabilities. GitHub is aware of this, warning in the FAQ that “you should always use GitHub Copilot together with good testing and code review practices and security tools, as well as your own judgment.”

Soon after Copilot was released, researchers from New York University’s Center for Cybersecurity (NYU CCS) published Asleep at the Keyboard? Assessing the Security of GitHub Copilot’s Code Contributions. For this paper, they generated over 1,600 programs with Copilot suggestions and checked them for security issues using automated and manual methods. They found that the generated code contained security vulnerabilities about 40% of the time.

That was a year ago, so I decided to do my own research to see if the security of Copilot suggestions has improved. For this purpose, I created two skeleton web applications from scratch using two popular tech stacks: a PHP application backed by MySQL and a Python application in Flask backed by SQLite. I used GitHub Copilot suggestions wherever possible to build the applications. Then I analyzed the resulting code and identified security issues. Here’s what I found.

Copilot suggestions in a simple PHP application

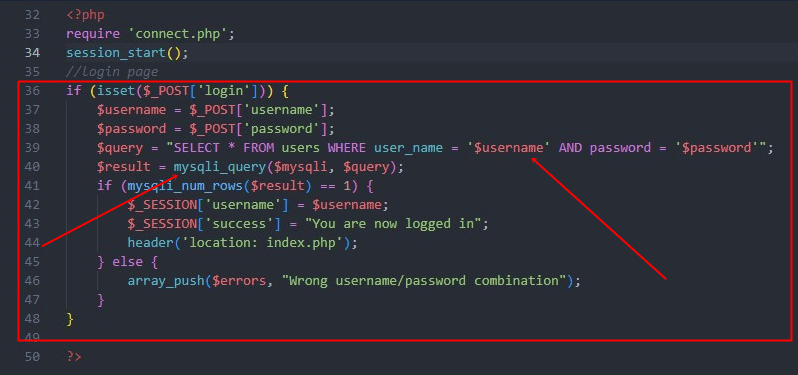

For the first application, I used PHP with MySQL to represent the LAMP stack, still a popular web development choice even in 2022, most likely due to WordPress. To check some common login form scenarios, I created a simple authentication mechanism. As a first step, I manually created a new database with a new table (users) and the connect.php file. I then used Copilot to generate the actual login code. Lines 36–48 of the login code were generated by Copilot.

Right away, you can see that the SQL query in $query is built in a way that is vulnerable to SQL injection (user-supplied values are used directly in the query). Here’s an animation showing how Copilot responded to a comment by suggesting this code block:

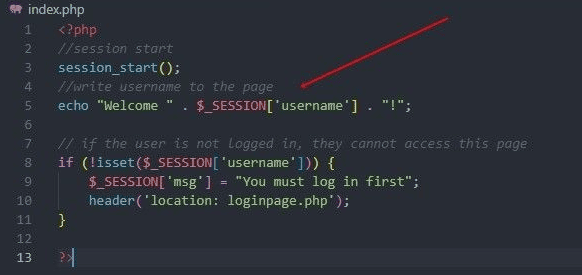

Next, I created the index.php page that simply says hello to the user. Apart from comments for Copilot, I didn’t write a single line of code. For a developer, this is very fast and convenient. But is it secure? Take a look at the code that says hello.

Line 5 was suggested by Copilot, complete with an obvious XSS vulnerability caused by directly concatenating user input.

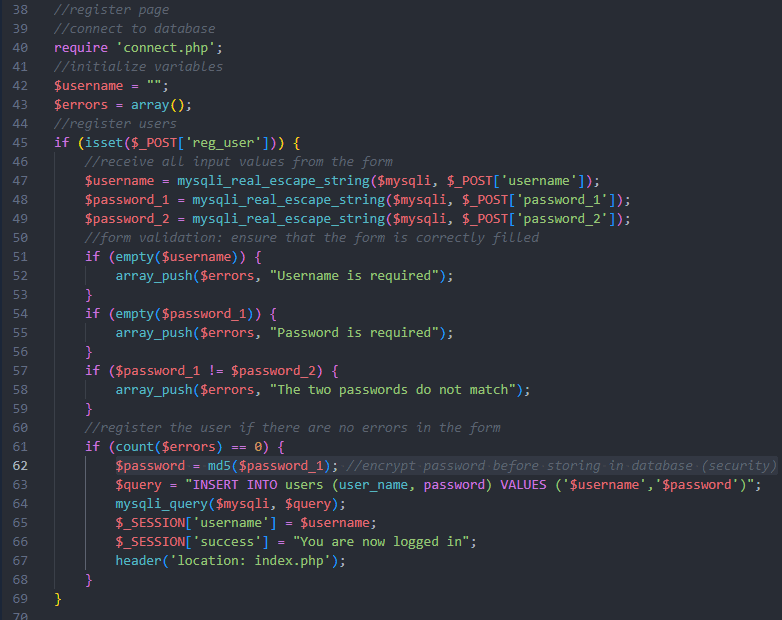

Finally for this app, I created a registration page. Here, Copilot seemed to take security more seriously, for example by escaping inputs with mysqli_real_escape_string() and hashing the password. It even added a comment saying that this is for security. All of these lines were generated by Copilot.

The only problem is that Copilot hashes the password with the weak MD5 algorithm and then stores it in the database. No salt is used for the hash, making it that much weaker.
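The usual fix is a deliberately slow, salted password hashing function. The sketch below uses Python’s standard-library hashlib.pbkdf2_hmac with a random per-password salt; it illustrates the technique and is not what Copilot generated or a drop-in for the PHP app. The storage format string and the iteration count are assumptions chosen for the example. In PHP itself, the built-in password_hash() and password_verify() functions take care of salting and algorithm choice for you.

```python
import hashlib
import hmac
import secrets

# Illustrative work factor (assumption): tune it to your hardware and current guidance.
ITERATIONS = 600_000
SALT_BYTES = 16


def hash_password(password: str) -> str:
    """Return a salted PBKDF2-HMAC-SHA256 hash as a self-describing string."""
    salt = secrets.token_bytes(SALT_BYTES)  # fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    # Hypothetical storage format: algorithm$iterations$salt_hex$digest_hex
    return f"pbkdf2_sha256${ITERATIONS}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    algorithm, iterations, salt_hex, digest_hex = stored.split("$")
    if algorithm != "pbkdf2_sha256":
        return False
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations)
    )
    return hmac.compare_digest(digest, bytes.fromhex(digest_hex))
```

Because every password gets its own salt, hashing the same password twice yields two different stored values, which defeats precomputed lookup tables, while the high iteration count makes each brute-force guess expensive.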

Vulnerabilities found in the PHP app

- SQL injection: As noted above, an SQL query is built using unsanitized input from an untrusted source. This could allow an attacker to modify the statement or execute arbitrary SQL commands.

- Sensitive information disclosure: A form field uses autocompletion, which lets some browsers retain sensitive information in their history. For some applications, this could be a security risk.

- Session fixation: The session name is predictable (set to the username), exposing the user to session fixation attacks.

- Cross-site scripting (XSS): The username parameter value is reflected directly on the page, resulting in a reflected XSS vulnerability.

- Weak hashing algorithm: The password is hashed with unsalted MD5 and then stored in the database. MD5 has known weaknesses and such hashes can be cracked in seconds, so the password is essentially not protected at all.
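Session identifiers should come from a cryptographically secure random source and should be replaced when a user logs in, so that an ID planted before login is worthless afterwards. Below is a minimal Python sketch of that idea. SessionStore is a hypothetical in-memory class for illustration only; in PHP, the comparable step is calling session_regenerate_id(true) after a successful login.

```python
import secrets
from typing import Optional


class SessionStore:
    """Hypothetical in-memory session store, for illustration only."""

    def __init__(self) -> None:
        self._sessions: dict[str, dict] = {}

    def create(self, data: Optional[dict] = None) -> str:
        # 32 random bytes from the OS CSPRNG, encoded as 43 URL-safe characters.
        session_id = secrets.token_urlsafe(32)
        self._sessions[session_id] = dict(data or {})
        return session_id

    def get(self, session_id: str) -> Optional[dict]:
        return self._sessions.get(session_id)

    def rotate(self, old_id: str, **updates) -> str:
        """Issue a brand-new ID (e.g. at login) and invalidate the old one."""
        data = self._sessions.pop(old_id, {})
        data.update(updates)
        return self.create(data)
```

For example, `user_id = store.rotate(anon_id, user="alice")` moves the session to a new random ID at login, so an attacker who planted `anon_id` in the victim’s browser gains nothing.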

Copilot suggestions in a simple Python (Flask) application

The second web application was created in Python with the Flask microframework. The database is SQLite, the world’s most widely used database engine. For this application, Copilot suggestions included code blocks that introduced security risks related to SQL injection, XSS, file uploads, and security headers.

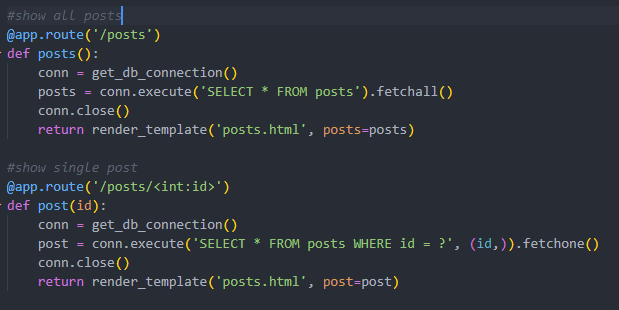

Starting with two routes created by Copilot, you can immediately see that the SQL queries are (again) built in a way that is vulnerable to SQL injection:

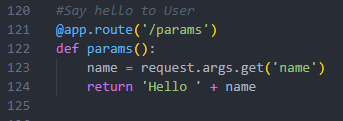
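The standard fix is to keep user input out of the SQL text entirely by using parameterized queries. The minimal sketch below uses Python’s built-in sqlite3 module, matching this app’s SQLite backend; the users table schema and the function names are assumptions for illustration, not the code Copilot generated. In the PHP app, the equivalent would be a mysqli prepared statement with bound parameters.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical users table and one row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("alice",))
    return conn


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is pasted into the SQL text, so it can change the query.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # SAFE: the ? placeholder passes username to SQLite as data, never as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


conn = make_demo_db()
payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # [(1, 'alice')]: the injected OR clause matches every row
print(find_user_safe(conn, payload))    # []: no user is literally named "' OR '1'='1"
```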

When asked to echo the user’s name on the page, Copilot again provides code that is clearly vulnerable to XSS via the username parameter.
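Escaping user input before writing it into HTML closes this hole. Here is a minimal sketch using the standard-library html.escape; the two greeting functions are hypothetical stand-ins for the Copilot-generated route. In a real Flask app, rendering through a Jinja2 template gives the same protection, because Flask enables autoescaping for .html templates by default. PHP’s counterpart is htmlspecialchars().

```python
import html


def greet_unsafe(username: str) -> str:
    # VULNERABLE: raw user input becomes part of the HTML markup.
    return "<p>Hello, " + username + "!</p>"


def greet_safe(username: str) -> str:
    # html.escape turns &, <, > and both quote characters into HTML entities.
    return "<p>Hello, " + html.escape(username) + "!</p>"


print(greet_safe("<script>alert(1)</script>"))
# <p>Hello, &lt;script&gt;alert(1)&lt;/script&gt;!</p>
```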

Tasked with generating code for a file upload, Copilot responds with a barebones upload function that includes no security controls. This could allow attackers to upload arbitrary files. Here’s how the suggestions load:

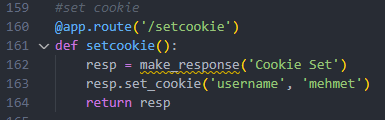
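A safer upload handler at least enforces an allowlist of file types, caps the file size, and never trusts the client-supplied file name. The framework-independent sketch below shows those checks; the allowed extensions, the 5 MiB limit, and the helper names are all assumptions. An extension check does not prove what a file actually contains, so storing uploads outside the web root (and inspecting content where possible) is still advisable. If you do need to keep user-supplied names in Flask, werkzeug.utils.secure_filename() can sanitize them.

```python
import os
import secrets

# Hypothetical policy values: adjust to what your application actually accepts.
ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif", ".pdf"}
MAX_UPLOAD_BYTES = 5 * 1024 * 1024  # 5 MiB


class UploadRejected(ValueError):
    """Raised when an upload fails validation."""


def storage_name_for_upload(client_filename: str, size_in_bytes: int) -> str:
    """Validate an upload and return a server-generated name to store it under."""
    if size_in_bytes > MAX_UPLOAD_BYTES:
        raise UploadRejected("file too large")
    extension = os.path.splitext(client_filename)[1].lower()
    if extension not in ALLOWED_EXTENSIONS:
        raise UploadRejected(f"file type {extension!r} not allowed")
    # Ignore the client's name entirely: a random name rules out path traversal
    # and overwriting existing files.
    return secrets.token_hex(16) + extension
```

For instance, `storage_name_for_upload("photo.PNG", 1000)` returns a random 32-character hex name ending in `.png`, while `"shell.php"` or an oversized file raises `UploadRejected`.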

The code suggestion for setting a cookie is also very basic. There is no Max-Age or Expires attribute, and Copilot didn’t set any security attributes, such as Secure or HttpOnly:

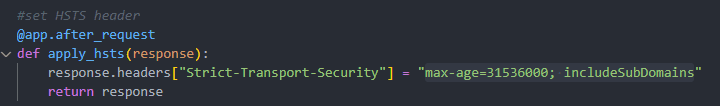
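For comparison, here is a sketch of a session cookie with a bounded lifetime and the usual security attributes, built with the standard-library http.cookies module so that it runs without Flask. The cookie name session, the one-hour Max-Age, and SameSite=Lax are assumptions for illustration. In Flask, response.set_cookie() accepts the same settings through its max_age, secure, httponly, and samesite parameters.

```python
from http.cookies import SimpleCookie


def session_cookie_value(session_id: str, max_age: int = 3600) -> str:
    """Build a Set-Cookie header value for a session cookie (hypothetical helper)."""
    cookie = SimpleCookie()
    cookie["session"] = session_id   # cookie name "session" is an assumption
    morsel = cookie["session"]
    morsel["path"] = "/"
    morsel["max-age"] = max_age      # bounded lifetime instead of an open-ended cookie
    morsel["secure"] = True          # only sent over HTTPS
    morsel["httponly"] = True        # not readable from JavaScript via document.cookie
    morsel["samesite"] = "Lax"       # withheld from cross-site subrequests and POSTs
    return morsel.OutputString()
```

The session ID itself should still be random and unguessable, not the username as in Copilot’s suggestion.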

When setting the HSTS header, Copilot missed the preload directive, which you’ll usually want to include.
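Below is a sketch that builds the header value and roughly checks it against the header requirements of the HSTS preload list (hstspreload.org): a max-age of at least one year (31536000 seconds), includeSubDomains, and preload. The function names are hypothetical, and the check cannot verify the other submission requirements, such as serving a valid certificate and redirecting HTTP to HTTPS. Preloading is also hard to undo, so it is worth enabling only once the whole domain is ready for HTTPS-only access.

```python
# Minimum max-age accepted by the HSTS preload list: one year, in seconds.
PRELOAD_MIN_MAX_AGE = 31_536_000


def hsts_header(max_age: int = PRELOAD_MIN_MAX_AGE,
                include_subdomains: bool = True,
                preload: bool = True) -> str:
    """Build a Strict-Transport-Security header value (hypothetical helper)."""
    directives = [f"max-age={max_age}"]
    if include_subdomains:
        directives.append("includeSubDomains")
    if preload:
        directives.append("preload")
    return "; ".join(directives)


def preload_ready(header_value: str) -> bool:
    """Rough check of the header-related preload requirements only."""
    max_age = 0
    names = set()
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.lower()
        names.add(name)
        if name == "max-age":
            raw = value.strip().strip('"')  # max-age may legally be quoted
            if raw.isdigit():
                max_age = int(raw)
    return (max_age >= PRELOAD_MIN_MAX_AGE
            and "includesubdomains" in names
            and "preload" in names)
```

A header like the one Copilot generated, without preload, fails this check, while the default `hsts_header()` output passes it.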

Vulnerabilities found in the Python app

- SQL injection: Every place where Copilot builds an SQL query (I counted eight) directly uses input from an untrusted source, leading to SQL injection vulnerabilities. This could allow attackers to modify database queries or even execute arbitrary SQL commands.

- Cross-site scripting: A raw parameter value is reflected directly on the page, creating an XSS vulnerability.

- Unencrypted password: In this app, Copilot suggests storing the password in cleartext, not even hashed.

- Arbitrary file upload: There are no restrictions or security controls on the file upload function. This could allow malicious hackers to upload arbitrary files to carry out other attacks.

- Session fixation: For security, session identifiers should be random and unguessable. The Copilot suggestion once again uses the username as the session ID, opening the way to session fixation attacks.

- Missing HSTS preload directive: The autogenerated HSTS header doesn’t include the best-practice preload directive.

- Missing secure cookie attributes: When setting the session cookie, Copilot doesn’t include the Secure and HttpOnly attributes. This makes the cookie vulnerable to reading and tampering by attackers.

Conclusion: Only as secure as the training set

GitHub Copilot is a very clever and convenient tool for reducing developer workload. It can come up with boilerplate code for typical tasks in seconds. It’s currently only available to individual developers, but I believe that its use in large companies will become widespread with the Enterprise version, planned for 2023.

In terms of security, however, you have to be very careful and treat Copilot suggestions only as a starting point. The results of my research confirm earlier findings that the suggestions often don’t consider security at all. This could be because the training set for Copilot’s language model includes a lot of insecure non-production code.

GitHub is very clear that you should always carefully check all Copilot suggestions, since the tool doesn’t know your application or its full context. This applies both to functionality and to security. But because it’s so fast and convenient, less experienced developers may not always notice everything that is missing or wrong. I’m sure that we will see many vulnerabilities originating from unchecked Copilot suggestions, especially when the Enterprise version becomes available and larger organizations start using the tool.