The C-suite is more familiar with AI technologies than their IT and security staff, according to a report from the Cloud Security Alliance commissioned by Google Cloud. The report, published on April 3, addressed whether IT and security professionals fear AI will replace their jobs, the benefits and challenges of the rise in generative AI, and more.

Of the IT and security professionals surveyed, 63% believe AI will improve security within their organization. Another 24% are neutral on AI’s impact on security measures, while 12% don’t believe AI will improve security within their organization. Only a small minority (12%) of respondents predict AI will replace their jobs.

The survey behind the report was conducted internationally in November 2023, with responses from 2,486 IT and security professionals and C-suite leaders at organizations across the Americas, APAC and EMEA.

Cybersecurity professionals outside leadership are less clear than the C-suite on possible use cases for AI in cybersecurity: just 14% of staff (compared to 51% of C-level executives) say they are “very clear.”

“The disconnect between the C-suite and staff in understanding and implementing AI highlights the need for a strategic, unified approach to successfully integrate this technology,” said Caleb Sima, chair of the Cloud Security Alliance’s AI Safety Initiative, in a press release.

Some questions in the report specified that answers should relate to generative AI, while other questions used the term “AI” broadly.

The AI knowledge gap in security

C-level professionals face top-down pressure that may have made them more aware of use cases for AI than security professionals are.

Many (82%) C-suite professionals say their executive leadership and boards of directors are pushing for AI adoption. However, the report states that this approach might cause implementation problems down the line.

“This may highlight a lack of appreciation for the difficulty and knowledge needed to adopt and implement such a unique and disruptive technology (e.g., prompt engineering),” wrote lead author Hillary Baron, senior technical director of research and analytics at the Cloud Security Alliance, and a team of contributors.

There are a few reasons why this knowledge gap might exist:

- Cybersecurity professionals may not be as informed about the ways AI can affect overall strategy.

- Leaders may underestimate how difficult it could be to implement AI strategies within existing cybersecurity practices.

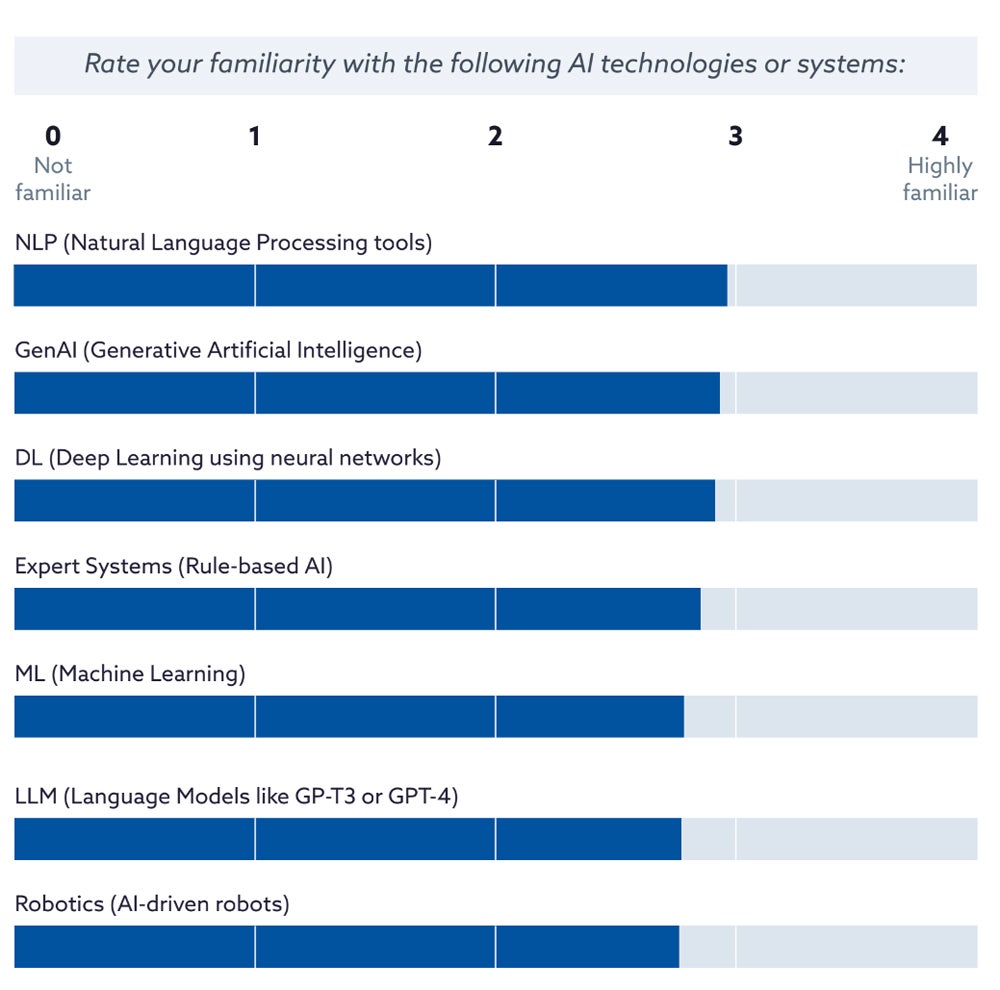

The report authors note that some data (Figure A) indicates respondents are about as familiar with generative AI and large language models as they are with older terms like natural language processing and deep learning.

Figure A

The report authors note that the predominance of familiarity with older terms such as natural language processing and deep learning might indicate that respondents conflate generative AI with popular tools like ChatGPT.

“It’s the difference between being familiar with consumer-grade GenAI tools vs. professional/enterprise-level tools that is more important in terms of adoption and implementation,” Baron said in an email to TechRepublic. “That’s something we’re seeing generally across the board with security professionals at all levels.”

Will AI replace cybersecurity jobs?

A small group (12%) of security professionals think AI will completely replace their jobs over the next five years. Others are more optimistic:

- 30% think AI will help enhance parts of their skill set.

- 28% predict AI will support them overall in their current role.

- 24% think AI will replace a large part of their role.

- 5% expect AI will not affect their role at all.

Organizations’ goals for AI reflect this: 36% are seeking the outcome of AI enhancing their security teams’ skills and knowledge.

The report points out an interesting discrepancy: although enhancing skills and knowledge is a highly desired outcome, talent comes at the bottom of the list of challenges. This might mean that immediate tasks such as identifying threats take priority in day-to-day operations, while talent is a longer-term concern.

Benefits and challenges of AI in cybersecurity

Respondents were divided on whether AI would be more beneficial for defenders or attackers:

- 34% see AI as more beneficial for security teams.

- 31% view it as equally advantageous for both defenders and attackers.

- 25% see it as more beneficial for attackers.

Professionals who are concerned about the use of AI in security cite the following reasons:

- Poor data quality leading to unintended bias and other issues (38%).

- Lack of transparency (36%).

- Skills/expertise gaps when it comes to managing complex AI systems (33%).

- Data poisoning (28%).

Hallucinations, privacy, data leakage or loss, accuracy and misuse were other possible concerns; each of these options received under 25% of the votes in the survey, in which respondents were invited to select their top three concerns.

SEE: The UK National Cyber Security Centre found generative AI may enhance attackers’ arsenals. (TechRepublic)

Over half (51%) of respondents said “yes” when asked whether they are concerned about the potential risks of over-reliance on AI for cybersecurity; another 28% were neutral.

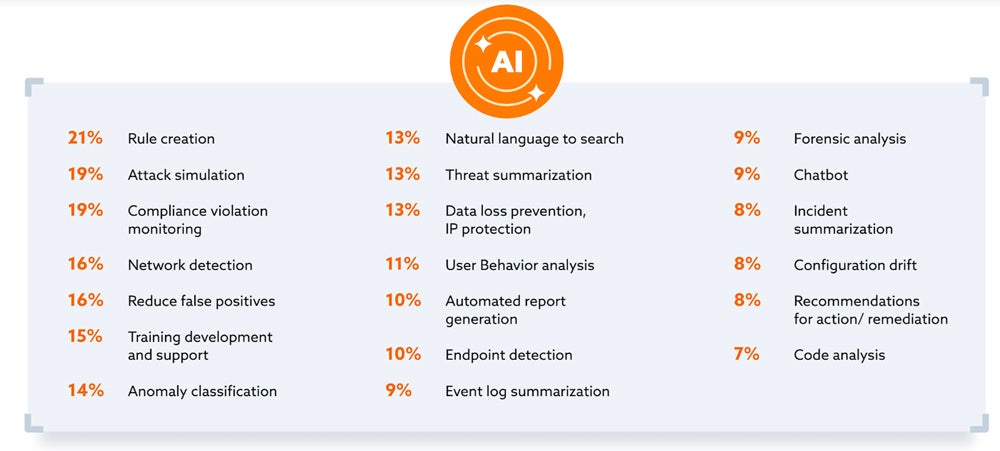

Planned uses for generative AI in cybersecurity

Among organizations planning to use generative AI for cybersecurity, intended uses vary widely (Figure B). Common uses include:

- Rule creation.

- Assault simulation.

- Compliance violation monitoring.

- Community detection.

- Reducing false positives.

Figure B

How organizations are structuring their teams in the age of AI

Of the people surveyed, 74% say their organizations plan to create new teams to oversee the secure use of AI within the next five years. How those teams are structured can vary.

Currently, some organizations working on AI deployment put it in the hands of their security team (24%). Others give primary responsibility for AI deployment to the IT department (21%), the data science/analytics team (16%), a dedicated AI/ML team (13%) or senior management/leadership (9%). In rarer cases, DevOps (8%), cross-functional teams (6%) or a team that didn’t fit any of the categories (listed as “other” at 1%) took responsibility.

SEE: Hiring kit: prompt engineer (TechRepublic Premium)

“It’s evident that AI in cybersecurity is not just transforming existing roles but also paving the way for new specialized positions,” wrote lead author Hillary Baron and the team of contributors.

What kind of positions? Generative AI governance is a growing sub-field, Baron told TechRepublic, as is AI-focused training and upskilling.

“In general, we’re also starting to see job postings that include more AI-specific roles like prompt engineers, AI security architects, and security engineers,” said Baron.
