In a landmark transfer, the US Nationwide Institute of Requirements and Know-how (NIST) has taken a brand new step in growing methods to battle in opposition to cyber-threats that focus on AI-powered chatbots and self-driving vehicles.

The Institute launched a brand new paper on January 4, 2024, during which it established a standardized strategy to characterizing and defending in opposition to cyber-attacks on AI.

The paper, referred to as Adversarial Machine Studying: A Taxonomy and Terminology of Assaults and Mitigations, was written in collaboration with academia and trade. It paperwork the various kinds of adversarial machine studying (AML) assaults and a few mitigation strategies.

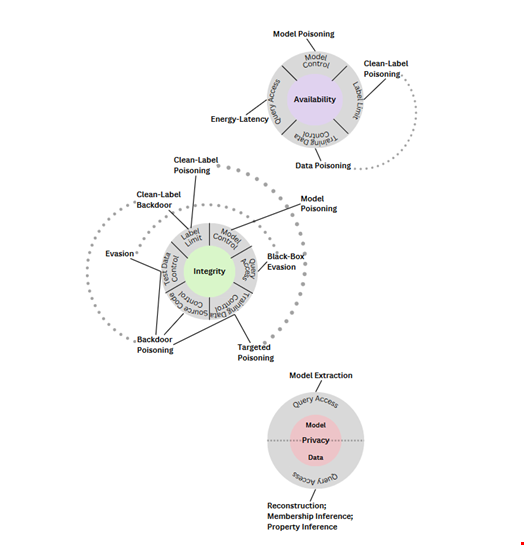

In its taxonomy, NIST broke down AML assaults into two classes:

- Assaults focusing on ‘predictive AI’ methods

- Assaults focusing on ‘generative AI’ methods

What NIST calls ‘predictive AI’ refers to a broad understanding of AI and machine studying methods that predict behaviors and phenomena. An instance of such methods could be present in pc imaginative and prescient units or self-driving vehicles.

‘Generative AI,’ in NIST taxonomy, is a sub-category inside ‘predictive AI,’ which incorporates generative adversarial networks, generative pre-trained transformers and diffusion fashions.

“Whereas many assault varieties within the PredAI taxonomy apply to GenAI […], a considerable physique of latest work on the safety of GenAI deserves explicit deal with novel safety violations,” reads the paper.

Evasion, Poisoning and Privateness Assaults

For ‘predictive AI’ methods, the report considers three kinds of assaults:

- Evasion assaults, during which the adversary’s purpose is to generate adversarial examples, that are outlined as testing samples whose classification could be modified at a deployment time to an arbitrary class of the attacker’s selection with solely minimal perturbation

- Poisoning assaults, referring to adversarial assaults performed through the coaching stage of the AI algorithm

- Privateness assaults, makes an attempt to be taught delicate details about the AI or the info it was educated on so as to misuse it

Alina Oprea, a professor at Northeastern College and one of many paper’s co-authors, commented in a public assertion: “Most of those assaults are pretty straightforward to mount and require minimal data of the AI system and restricted adversarial capabilities. Poisoning assaults, for instance, could be mounted by controlling a number of dozen coaching samples, which might be a really small share of the whole coaching set.”

Abusing Generative AI Programs

AML assaults focusing on ‘generative AI’ methods fall underneath a fourth class, which NIST calls abuse assaults. They contain the insertion of incorrect info right into a supply, similar to a webpage or on-line doc, that an AI then absorbs.

Not like poisoning assaults, abuse assaults try to offer the AI incorrect items of data from a official however compromised supply to repurpose the AI system’s meant use.

A few of the talked about abuse assaults embody:

- AI provide chain assaults

- Direct immediate injection assaults

- Oblique immediate injection assaults

Want for Extra Complete Mitigation Methods

The authors offered some mitigation strategies and approaches for every of those classes and sub-categories of assaults.

Nonetheless, Apostol Vassilev, a pc scientist at NIST and one of many co-authors, admitted that they’re nonetheless largely inadequate.

“Regardless of the numerous progress AI and machine studying have made, these applied sciences are weak to assaults that may trigger spectacular failures with dire penalties. There are theoretical issues with securing AI algorithms that merely haven’t been solved but. If anybody says in another way, they’re promoting snake oil,” he mentioned.

“We […] describe present mitigation methods reported within the literature, however these obtainable defenses presently lack sturdy assurances that they absolutely mitigate the dangers. We’re encouraging the group to give you higher defenses.”

NIST’s Effort to Assist the Growth of Reliable AI

The publication of this paper comes three months after the discharge of Joe Biden’s Government Order on Protected, Safe, and Reliable Growth and Use of Synthetic Intelligence (EO 14110). The EO tasked NIST to assist the event of reliable AI.

The taxonomy launched within the NIST paper may even function a foundation to place into follow NIST’s AI Danger Administration Framework, which was first launched in January 2023.

In November 2023 on the UK’s AI Security Summit, US Vice-President Kamala Harris introduced the creation of a brand new entity inside NIST, the US AI Security Institute.

The Institute’s mission is to facilitate the event of requirements for the security, safety, and testing of AI fashions, develop requirements for authenticating AI-generated content material, and supply testing environments for researchers to judge rising AI dangers and tackle identified impacts.

The UK additionally inaugurated its personal AI Security Institute through the summit.

Learn extra: AI Security Summit: OWASP Urges Governments to Agree on AI Safety Requirements