On May 28 at the COMPUTEX conference in Taipei, NVIDIA announced a host of new hardware and networking tools, many focused on enabling artificial intelligence. The new lineup includes the 1-exaflop supercomputer, the DGX GH200 class; over 100 system configuration options designed to help companies host AI and high-performance computing workloads; a modular reference architecture for accelerated servers; and a cloud networking platform built around Ethernet-based AI clouds.

The announcements, along with the first public talk co-founder and CEO Jensen Huang has given since the start of the COVID-19 pandemic, helped propel NVIDIA within sight of the coveted $1 trillion market capitalization. Reaching it would make NVIDIA the first chipmaker to ascend to the realm of tech giants like Microsoft and Apple.

What makes the DGX GH200 for AI supercomputers different?

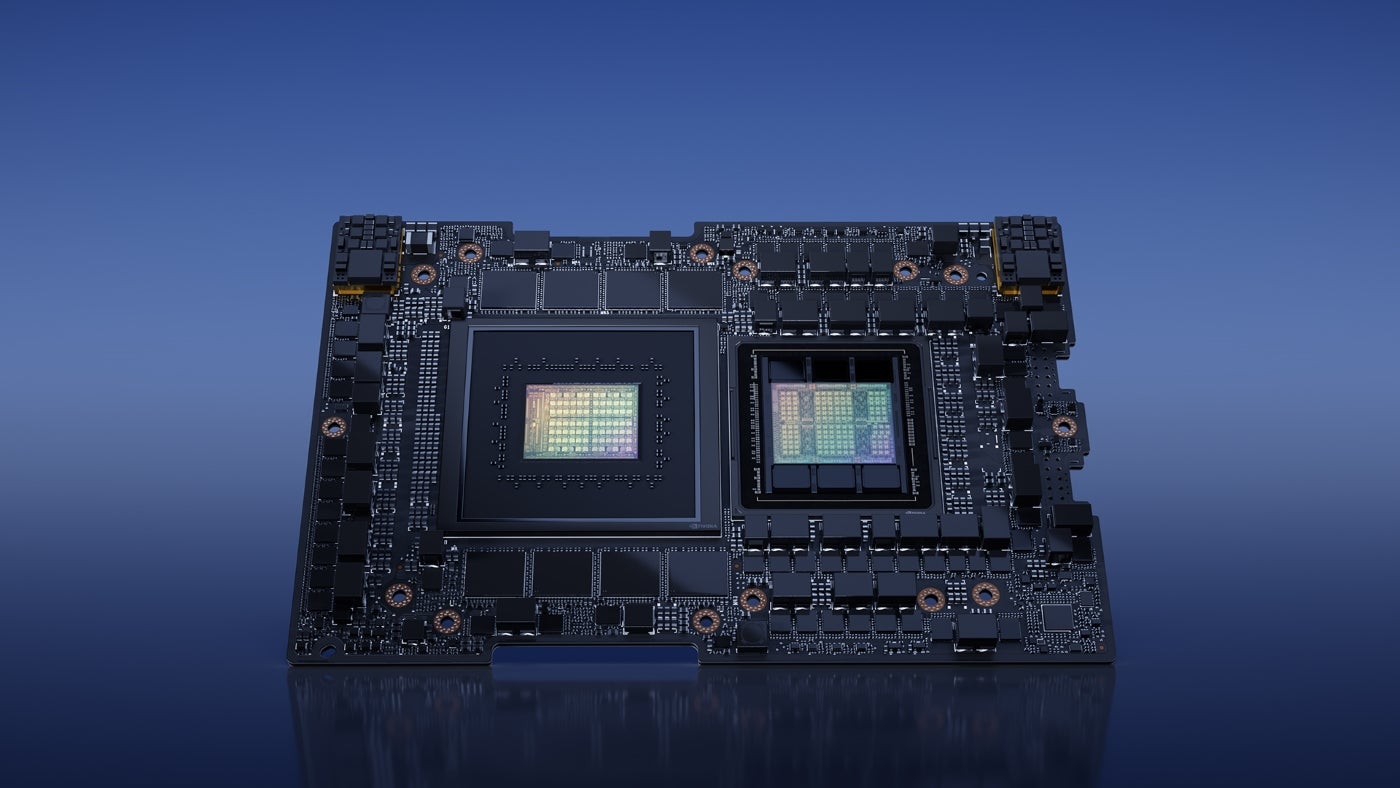

NVIDIA’s new class of AI supercomputers takes advantage of the GH200 Grace Hopper Superchips and the NVIDIA NVLink Switch System interconnect to run generative AI language applications, recommender systems (machine learning engines for predicting how a user might rate a product or piece of content), and data analytics workloads (Figure A). It’s the first product to use both the high-performance chips and the novel interconnect.

Figure A

NVIDIA will offer the DGX GH200 to Google Cloud, Meta and Microsoft first. Next, it plans to offer the DGX GH200 design as a blueprint to cloud service providers and other hyperscalers. It’s expected to be available by the end of 2023.

The DGX GH200 is intended to let organizations run AI from their own data centers. The 256 GH200 superchips in each unit provide 1 exaflop of performance and 144 terabytes of shared memory.
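To put those system-level figures in per-chip terms, the short sketch below divides them evenly across the 256 superchips. The even split and the use of binary units (1 TB = 1,024 GB) are assumptions made for illustration, and the variable names are hypothetical; the article itself only gives the totals.

```python
# System-level figures quoted for one DGX GH200.
SUPERCHIPS = 256
TOTAL_EXAFLOPS = 1.0
TOTAL_SHARED_MEMORY_TB = 144

# Assumption: performance divides evenly across superchips.
petaflops_per_chip = TOTAL_EXAFLOPS * 1000 / SUPERCHIPS

# Assumption: binary units, so 1 TB = 1024 GB.
memory_gb_per_chip = TOTAL_SHARED_MEMORY_TB * 1024 / SUPERCHIPS

print(f"{petaflops_per_chip:.2f} petaflops per superchip (average)")
print(f"{memory_gb_per_chip:.0f} GB of shared memory per superchip (average)")
```

These are averages derived from the quoted totals, not per-chip specifications.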

NVIDIA explained in the announcement that the NVLink Switch System enables the GH200 chips to bypass a conventional CPU-to-GPU PCIe connection, increasing bandwidth while reducing power consumption.

Mark Lohmeyer, vice president of compute at Google Cloud, pointed out in an NVIDIA press release that the new Hopper chips and NVLink Switch System can “address key bottlenecks in large-scale AI.”

“Training large AI models is traditionally a resource- and time-intensive task,” said Girish Bablani, corporate vice president of Azure infrastructure at Microsoft, in the NVIDIA press release. “The potential for DGX GH200 to work with terabyte-sized datasets would allow developers to conduct advanced research at a larger scale and accelerated speeds.”

NVIDIA will also keep some supercomputing capability for itself; the company plans to build its own supercomputer called Helios, powered by four DGX GH200 systems.

Alternatives to NVIDIA’s supercomputing chips

There aren’t many companies or customers aiming for the AI and supercomputing speeds NVIDIA’s Grace Hopper chips enable. NVIDIA’s major rival is AMD, which produces the Instinct MI300. This chip includes both CPU and GPU cores, and is expected to run the 2-exaflop El Capitan supercomputer.

Intel offered the Falcon Shores chip, but it recently announced that the chip would not ship with both a CPU and GPU. Instead, Intel has changed the roadmap to focus on AI and high-performance computing, without CPU cores.

Enterprise library supports AI deployments

Another new service, the NVIDIA AI Enterprise library, is designed to help organizations access the software layer of the new AI offerings. It includes more than 100 frameworks, pretrained models and development tools. These frameworks are appropriate for the development and deployment of production AI, including generative AI, computer vision, speech AI and others.

On-demand support from NVIDIA AI experts will be available to help with deploying and scaling AI projects. It can help deploy AI on data center platforms from VMware and Red Hat or on NVIDIA-Certified Systems.

Faster networking for AI in the cloud

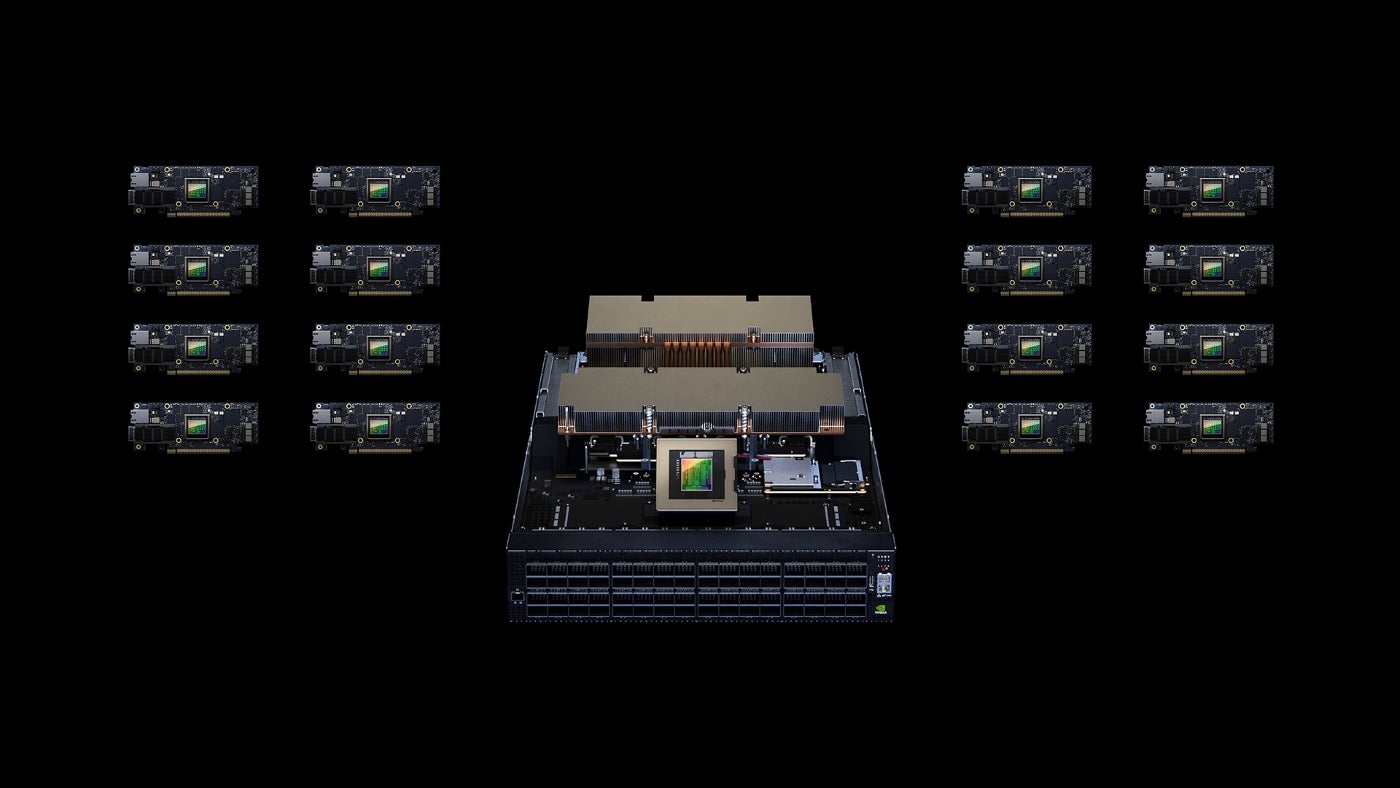

NVIDIA wants to help speed up Ethernet-based AI clouds with the accelerated networking platform Spectrum-X (Figure B).

Figure B

“NVIDIA Spectrum-X is a new class of Ethernet networking that removes barriers for next-generation AI workloads that have the potential to transform entire industries,” said Gilad Shainer, senior vice president of networking at NVIDIA, in a press release.

Spectrum-X can support AI clouds with 256 200Gbps ports connected by a single switch, or 16,000 ports in a two-tier spine-leaf topology.

Spectrum-X does so by using Spectrum-4, a 51Tbps Ethernet switch built specifically for AI networks. Advanced RoCE extensions bringing together the Spectrum-4 switches, BlueField-3 DPUs and NVIDIA LinkX optics create an end-to-end 400GbE network optimized for AI clouds, NVIDIA said.
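The port counts and the switch capacity quoted above are related by simple arithmetic, sketched here. The first function multiplies port count by port speed. The second applies the textbook sizing rule for a non-blocking two-tier spine-leaf fabric, where each leaf switch uses half its ports for endpoints and half for uplinks; that rule, the function names and the example radix are illustrative assumptions, not NVIDIA's published sizing method.

```python
def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Total bandwidth of one switch, in Tbps."""
    return ports * gbps_per_port / 1000


def two_tier_endpoints(radix: int) -> int:
    """Endpoint ports in a non-blocking two-tier spine-leaf fabric.

    Textbook assumption: every switch has `radix` ports; each leaf uses
    half for endpoints and half for uplinks, and each spine port feeds
    one leaf, so there can be up to `radix` leaves.
    """
    endpoints_per_leaf = radix // 2
    max_leaves = radix
    return endpoints_per_leaf * max_leaves


# 256 ports at 200Gbps matches the roughly 51Tbps switch capacity.
single_switch = aggregate_tbps(256, 200)
print(f"Single switch: {single_switch} Tbps")

# Hypothetical example: a radix of 128 ports per switch.
print(f"Two-tier endpoints at radix 128: {two_tier_endpoints(128)}")
```

Under the further assumption that each 400GbE endpoint port is split into two 200Gbps links, a radix of 128 yields 16,384 links, which is in the same range as the 16,000 figure; the article does not confirm that this is how the number was derived.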

Spectrum-X and its related products (Spectrum-4 switches, BlueField-3 DPUs and 400G LinkX optics) are available now, including ecosystem integration with Dell Technologies, Lenovo and Supermicro.

MGX Server Specification coming soon

In more news regarding accelerated performance in data centers, NVIDIA has released the MGX server specification. It is a modular reference architecture for system manufacturers working on AI and high-performance computing.

“We created MGX to help organizations bootstrap enterprise AI,” said Kaustubh Sanghani, vice president of GPU products at NVIDIA, in a press release.

Manufacturers will be able to specify their GPU, DPU and CPU preferences within the initial, basic system architecture. MGX is compatible with current and future NVIDIA server form factors, including 1U, 2U, and 4U (air or liquid cooled).

SoftBank is now working on building a network of data centers in Japan that will use the GH200 Superchips and MGX systems for 5G services and generative AI applications.

QCT and Supermicro have adopted MGX and will have it on the market in August.

What will change about data center management?

For businesses, adding high-performance computing or AI to data centers will require changes to physical infrastructure designs and systems. Whether and how much to do so depends on the individual situation. Joe Reele, vice president, solution architects at Schneider Electric, said many larger organizations are already on their way to making their data centers ready for AI and machine learning.

“Power density and heat dissipation are the drivers behind this transition,” Reele said in an email to TechRepublic. “Moreover, the way the IT equipment is architected for AI/ML in the white space is a driver as well when considering the need for things such as shorter cable runs and clustering.”

Operators of enterprise-owned data centers should decide based on their business priorities whether replacing servers and upgrading IT equipment to support generative AI workloads makes sense for them, Reele said.

“Yes, new servers will be more efficient and pack more punch when it comes to compute power, but operators must consider factors such as compute utilization, carbon emissions, and of course space, power, and cooling. While some operators may need to adjust their server infrastructure strategies, many will not need to make these massive updates in the near term,” he said.

Other news from NVIDIA at COMPUTEX

NVIDIA announced a variety of other new products and services based around artificial intelligence:

- WPP and NVIDIA Omniverse came together to announce a new engine for marketing. The content engine will be able to generate video and images for advertising.

- A smart manufacturing platform, Metropolis for Factories, can create and manage custom quality-control systems.

- The Avatar Cloud Engine (ACE) for Games is a foundry service for video game developers. It enables animated characters to call on AI for speech generation and animation.