New AI tools give people easier and faster ways to get their jobs done, and that includes cybercriminals. AI makes launching automated attacks more efficient and accessible.

You've likely heard of several ways threat actors are using ChatGPT and other AI tools for nefarious purposes. For example, generative AI has been shown to write successful phishing emails, identify targets for ransomware, and conduct social engineering. What you probably haven't heard is how attackers are exploiting AI technology to directly evade enterprise security defenses.

Although policies exist to restrict the misuse of these AI platforms, cybercriminals have been busy figuring out how to circumvent those restrictions and security protections.

Jailbreaking ChatGPT Plus and Bypassing ChatGPT’s API Protections

Bad actors are jailbreaking ChatGPT Plus to use the power of GPT-4 for free, without the restrictions and guardrails that attempt to prevent unethical or illegal use.

Kasada's research team has found that people are also gaining unauthorized access to ChatGPT's API by exploiting GitHub repositories, such as those shared on the GPT jailbreaks Reddit thread, to remove geofencing and other account limitations.

Credential-stuffing configs can also be modified with ChatGPT if users find the right OpenAI bypasses from sources like GitHub's gpt4free, which tricks OpenAI's API into believing it is receiving a legitimate request from websites with paid OpenAI accounts, such as You.com.

These resources make it possible for fraudsters not only to launch successful account takeover (ATO) attacks against ChatGPT accounts but also to use jailbroken accounts to assist with fraud schemes across other sites and applications.

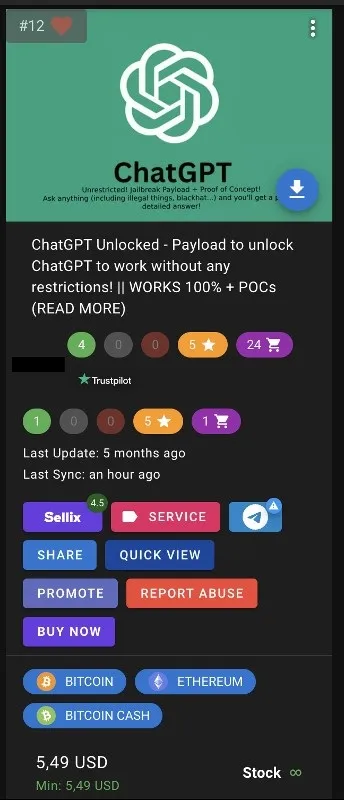

Jailbroken and stolen ChatGPT Plus accounts are actively being bought and sold on the Dark Web and other marketplaces and forums. Kasada researchers have found stolen ChatGPT Plus accounts for sale for as little as $5, effectively a 75% discount on the $20 monthly subscription price.

Stolen ChatGPT accounts have major consequences for account owners and for other websites and applications. For starters, when threat actors gain access to a ChatGPT account, they can view the account's query history, which may include sensitive information. Bad actors can also easily change the account credentials, locking the original owner out entirely.

More critically, stolen accounts set the stage for further, more sophisticated fraud. Because jailbroken accounts have their guardrails removed, cybercriminals can more easily harness the power of AI to carry out targeted, automated attacks on enterprises.

Bypassing CAPTCHAs with AI

Another way threat actors are using AI to exploit enterprise defenses is by evading CAPTCHAs. Although CAPTCHAs are universally disliked, they still secure 2.5 million (more than one-third) of all Internet sites.

Recent advances in AI make it easy for cybercriminals to bypass CAPTCHAs. ChatGPT has admitted that it could solve a CAPTCHA, and Microsoft recently announced an AI model that can solve visual puzzles.

Moreover, sites that rely on CAPTCHAs are increasingly vulnerable to today's sophisticated bots, which can bypass them with ease through AI-assisted CAPTCHA solvers such as CaptchaAI. These solvers are inexpensive and easy to find, posing a significant threat to online security.

Conclusion

Even with strict policies in place to prevent abuse on AI platforms, bad actors are finding creative ways to weaponize AI and launch attacks at scale. As defenders, we need greater awareness, collaborative efforts, and robust security designed to effectively fight AI-powered cyber threats, which will continue to evolve and advance at a faster pace than ever before.