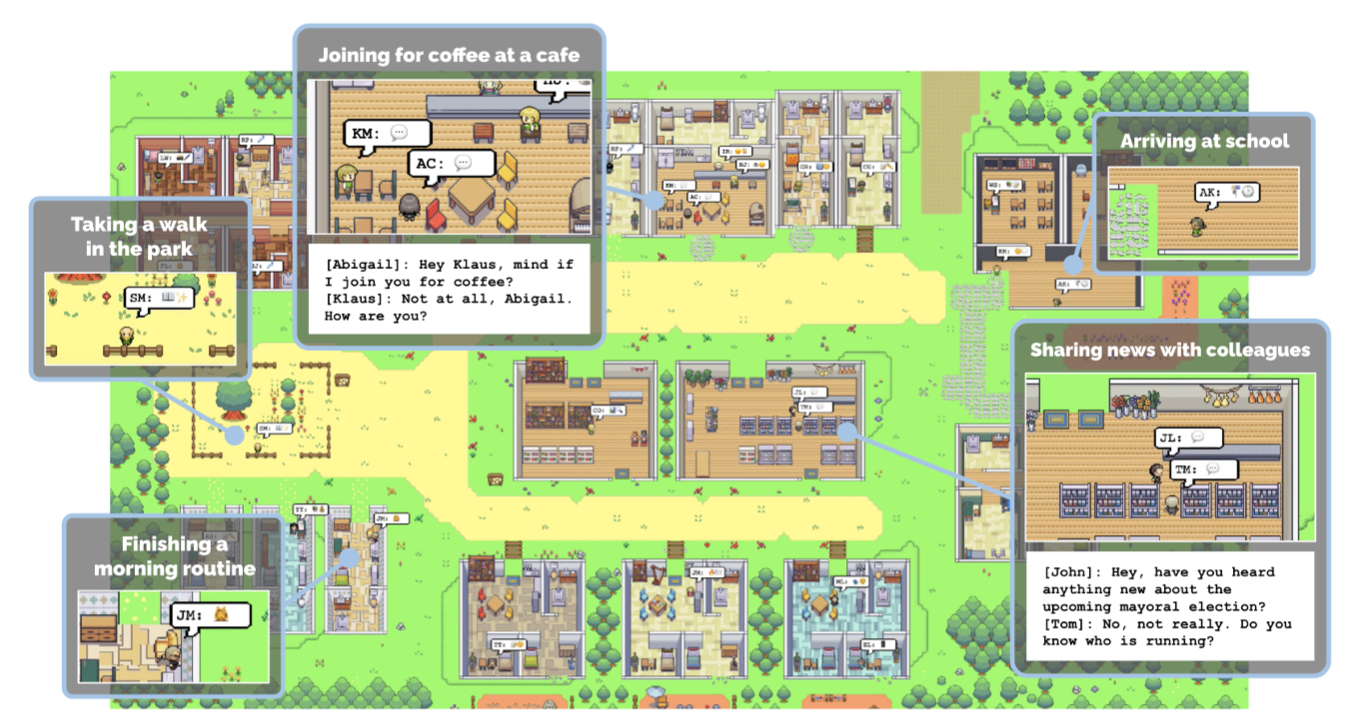

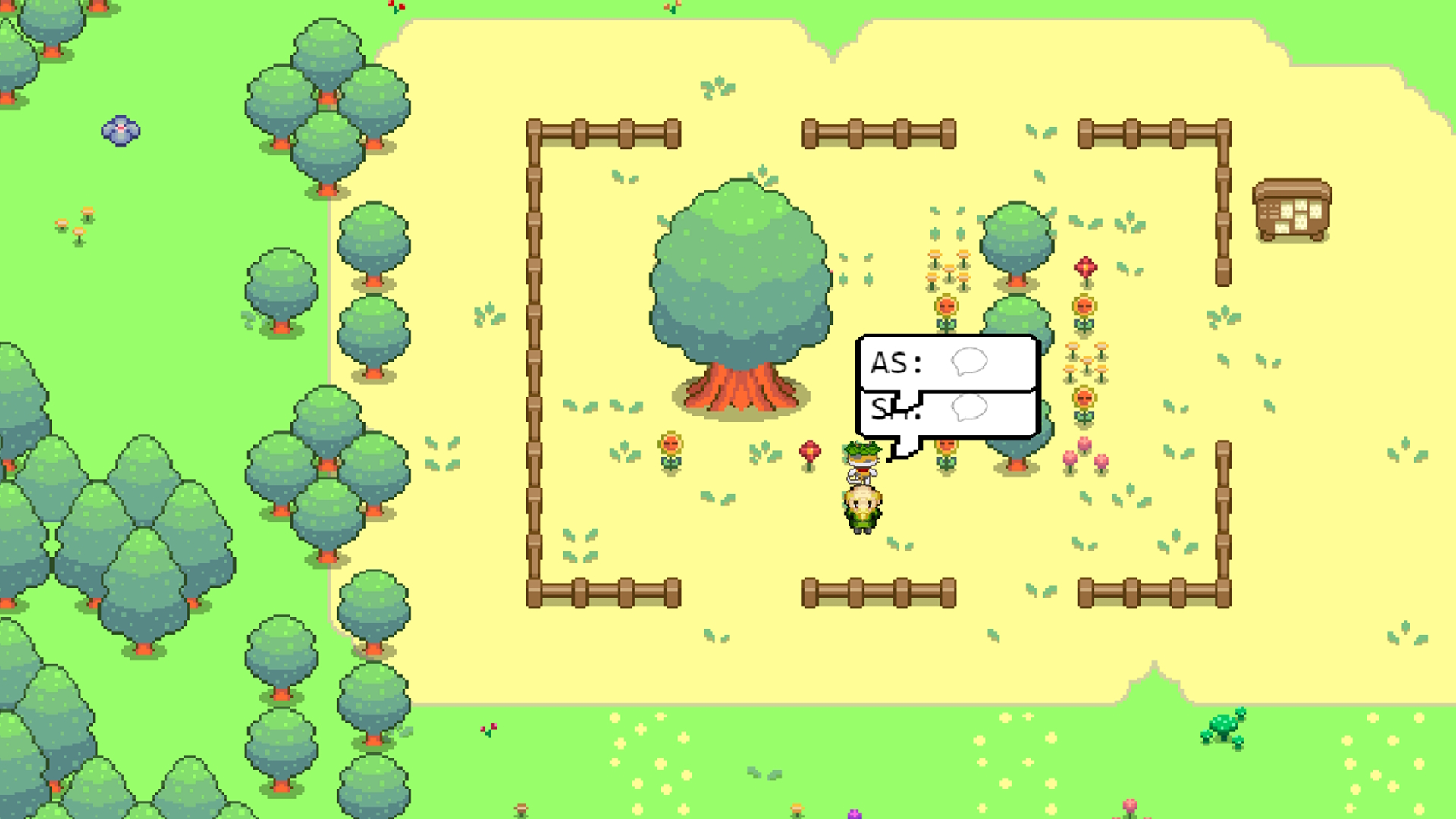

Last week, a few of us were briefly captivated by the simulated lives of “generative agents” created by researchers from Stanford and Google. Led by PhD student Joon Sung Park, the research team populated a pixel art world with 25 NPCs whose actions were guided by ChatGPT and an “agent architecture that stores, synthesizes, and applies relevant memories to generate believable behavior.” The result was both mundane and compelling.

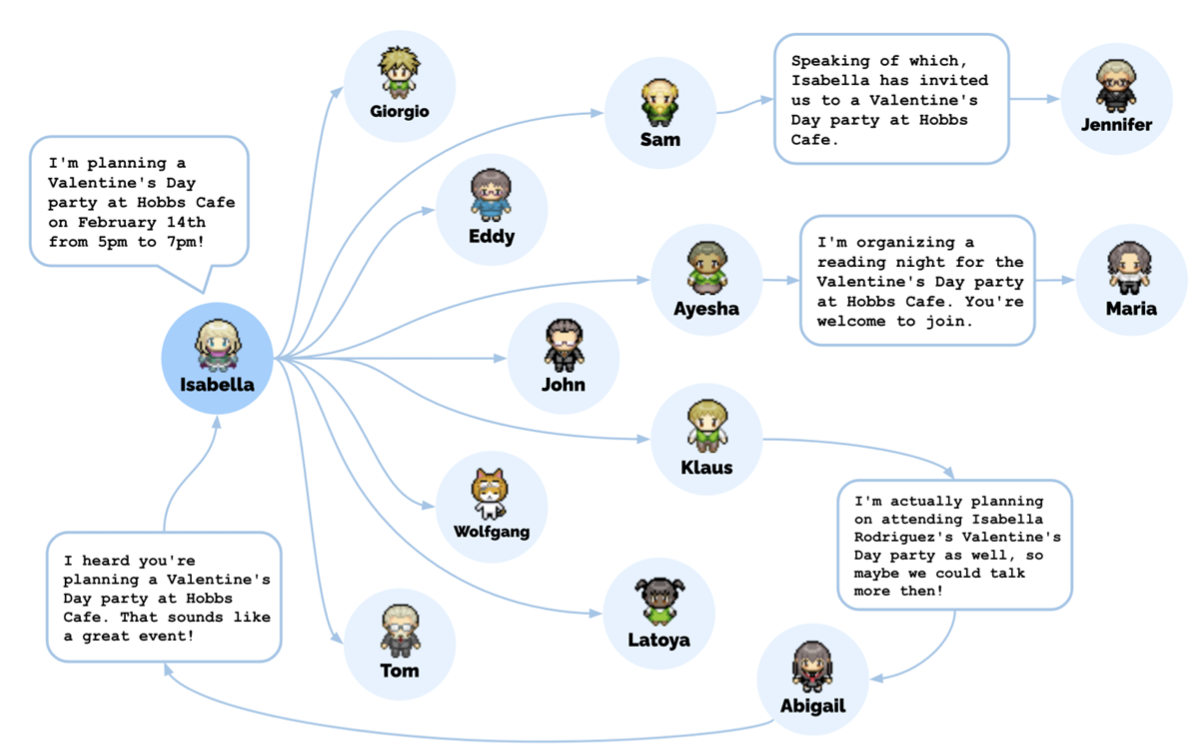

One of the agents, Isabella, invited some of the other agents to a Valentine’s Day party, for instance. As word of the party spread, new acquaintances were made, dates were set up, and eventually the invitees arrived at Isabella’s place at the correct time. Not exactly riveting stuff, but all that behavior began as one “user-specified notion” that Isabella wanted to throw a Valentine’s Day party. The activity that emerged happened between the large language model, agent architecture, and an “interactive sandbox environment” inspired by The Sims. Giving Isabella a different notion, like that she wanted to punch everyone in the town, would’ve led to an entirely different sequence of behaviors.

Along with other simulation purposes, the researchers think their model could be used to “underpin non-playable game characters that can navigate complex human relationships in an open world.”

The project reminds me a bit of Maxis’ doomed 2013 SimCity reboot, which promised to simulate a city down to its individual inhabitants with thousands of crude little agents that drove to and from work and hung out at parks. A version of SimCity that used these far more advanced generative agents would be enormously complex, and not possible in a videogame right now in terms of computational cost. But Park doesn’t think it’s far-fetched to imagine a future game operating at that level.

The full paper, titled “Generative Agents: Interactive Simulacra of Human Behavior,” is available here, and also catalogs flaws of the project (the agents have a habit of embellishing, for example) and ethical concerns.

Below is a conversation I had with Park about the project last week. It has been edited for length and clarity.

PC Gamer: We’re obviously interested in your project as it relates to game design. But what led you to this research: was it games, or something else?

Joon Sung Park: There’s kind of two angles on this. One is that this idea of creating agents that exhibit really believable behavior has been something that our field has dreamed about for a long time, and it’s something that we kind of forgot about, because we realized it’s too difficult, that we didn’t have the right ingredient that would make it work.

What we recognized when the large language model came out, like GPT-3 a few years back, and now ChatGPT and GPT-4, is that these models that are trained on raw data from the social web, Wikipedia, and basically the internet, have in their training data so much about how we behave, how we talk to each other, and how we do things, that if we poke them at the right angle, we can actually retrieve that information and generate believable behavior. Or basically, they become the sort of fundamental blocks for building these kinds of agents.

So we tried to imagine, ‘What is the most extreme, out there thing that we could possibly do with that idea?’ And our answer came out to be, ‘Can we create NPC agents that behave in a realistic manner? And that have long-term coherence?’ That was the last piece that we definitely wanted in there so that we could actually talk to these agents and they remember each other.

Another angle is that I think my advisor enjoys gaming, and I enjoyed gaming when I was younger, so this was always kind of like our childhood dream to some extent, and we wanted to give it a shot.

I know you set the ball rolling on certain interactions that you wanted to see happen in your simulation, like the party invitations, but did any behaviors emerge that you didn’t foresee?

There’s some subtle things in there that we didn’t foresee. We didn’t expect Maria to ask Klaus out. That was kind of a fun thing to see when it actually happened. We knew that Maria had a crush on Klaus, but there was no guarantee that a lot of these things would actually happen. And basically seeing that happen, the entire thing was sort of unexpected.

In retrospect, even the fact that they decided to have the party. So, we knew that Isabella would be there, but the fact that other agents would not only hear about it, but actually decide to come and plan their day around it: we hoped that something like that might happen, but when it did happen, that sort of surprised us.

It’s tough to talk about this stuff without using anthropomorphic words, right? We say the bots “made plans” or “understood each other.” How much sense does it make to talk like that?

Right. There’s a careful line that we’re trying to walk here. My background and my team’s background is the academic field. We’re scholars in this field, and we view our role as to be as grounded as we can be. And we’re extremely cautious about anthropomorphizing these agents or any kind of computational agents in general. So when we say these agents “plan” and “reflect,” we mention this more in the sense that a Disney character is planning to attend a party, right? Because we can say “Mickey Mouse is planning a tea party” with a clear understanding that Mickey Mouse is a fictional character, an animated character, and nothing beyond that. And when we say these agents “plan,” we mean it in that sense, and less that there’s actually something deeper going on. So you can basically imagine these as caricatures of our lives. That’s what it’s meant to be.

There’s a distinction between the behavior that is coming out of the language model, and then behavior that is coming from something the agent “experienced” in the world they inhabit, right? When the agents talk to each other, they might say “I slept well last night,” but they didn’t. They’re not referring to anything, just mimicking what a person might say in that situation. So it seems like the ideal goal is that these agents are able to reference things that “actually” happened to them in the game world. You’ve used the word “coherence.”

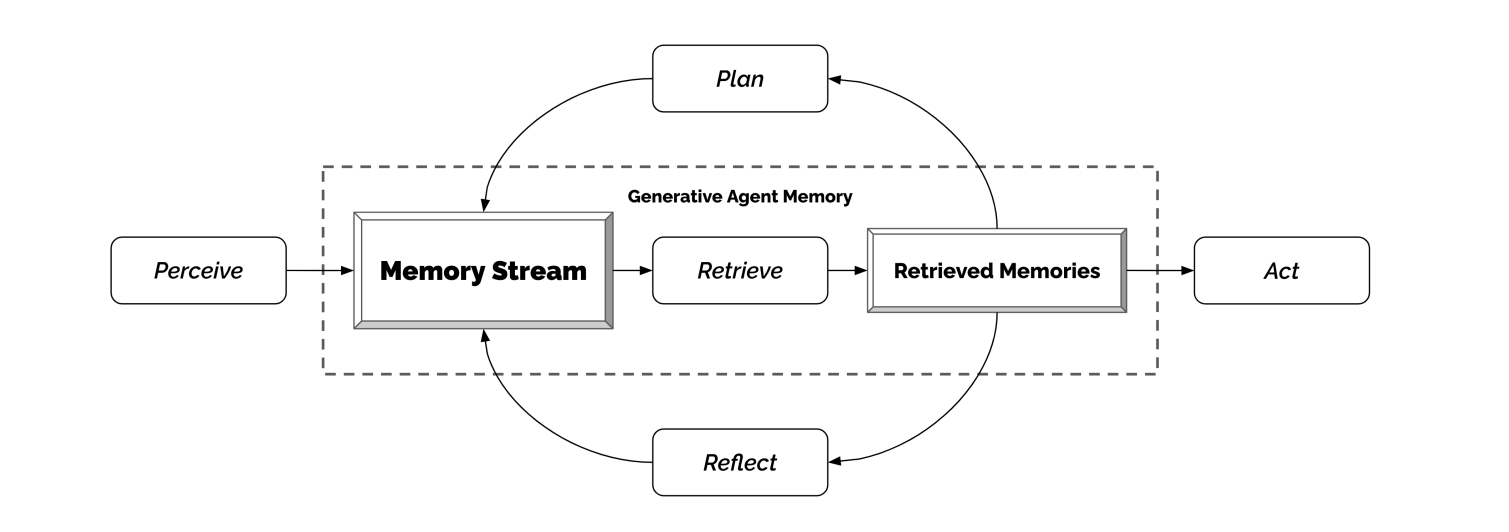

That’s exactly right. The main challenge for an interactive agent, the main scientific contribution that we’re making with this, is this idea. The main challenge that we are trying to overcome is that these agents perceive an incredible amount of their experience of the game world. So if you open up any of the state details and see all the things they observe, and all the things they “think about,” it’s a lot. If you were to feed everything to a large language model, even today with GPT-4 with a really large context window, you can’t even fit in half a day in that context window. And with ChatGPT, not even, I’d say, an hour’s worth of content.

So, you need to be extremely cautious about what you feed into your language model. You need to bring down the context into the key highlights that are going to inform the agent in the moment the best. And then use that to feed into a large language model. So that’s the main contribution we’re trying to make with this work.
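To make the constraint Park describes concrete, here is a minimal Python sketch of trimming a log of observations to fit a fixed budget. It is an illustration only, not the researchers’ code: the `Observation` type, its precomputed `score` field, the `pack_context` helper, and the use of a whitespace word count in place of a real tokenizer are all assumptions made for this example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Observation:
    text: str
    score: float  # hypothetical "how useful right now" score; higher is better


def rough_token_count(text: str) -> int:
    # Assumption: whitespace-separated words stand in for real model tokens.
    return len(text.split())


def pack_context(observations: list[Observation], budget: int) -> list[str]:
    """Greedily keep the highest-scoring observations that still fit the budget."""
    chosen: list[str] = []
    used = 0
    for obs in sorted(observations, key=lambda o: o.score, reverse=True):
        cost = rough_token_count(obs.text)
        if used + cost <= budget:
            chosen.append(obs.text)
            used += cost
    return chosen


if __name__ == "__main__":
    log = [
        Observation("the cup is idle", 0.1),
        Observation("Isabella is planning a Valentine's Day party", 0.9),
        Observation("Maria has a crush on Klaus", 0.7),
    ]
    # With a budget of 13 "tokens", the two highest-scoring lines fit
    # and the idle cup is left out.
    print(pack_context(log, budget=13))
```

A greedy pass like this is the simplest possible policy. The point is only that something has to decide what gets left out before the prompt is built.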

What kind of context data are the agents perceiving in the game world? More than their location and conversation with other NPCs? I’m surprised by the amount of information you’re talking about here.

So, the perception these agents have is designed quite simply: it’s basically their vision. So they can perceive everything within a certain radius, and each agent, including themselves, so they make a lot of self-observation as well. So, let’s say if there’s a Joon Park agent, then I’d be not only observing Tyler on the other side of the screen, but I’d also be observing Joon Park talking to Tyler. So there’s a lot of self-observation, observation of other agents, and the space also has states the agent observes.

A lot of the states are actually quite simple. So let’s say there’s a cup. The cup is on the table. These agents will just say, ‘Oh, the cup is just idle.’ That’s the term that we use to mean ‘it’s doing nothing.’ But all of those states will go into their memories. And there’s a lot of things in the environment, it’s quite a rich environment that these agents have. So all that goes into their memory.

So imagine if you or I were generative agents right now. I don’t need to remember what I ate last Tuesday for breakfast. That’s likely irrelevant to this conversation. But what might be relevant is the paper I wrote on generative agents. So that needs to get retrieved. So that’s the key function of generative agents: Of all this information that they have, what’s the most relevant one? And how can they talk about that?
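The perceive-then-retrieve loop Park outlines can be sketched in a few lines of Python. This is a toy under stated assumptions, not the paper’s implementation: the `Entity` and `Agent` classes, the `vision_radius` value, and the word-overlap (Jaccard) score used as a stand-in for relevance are all hypothetical, since the interview does not say how relevance is actually computed.

```python
from __future__ import annotations

import math
import re
from dataclasses import dataclass, field


@dataclass
class Entity:
    """Anything with a position and a state: an agent, a cup, a table."""
    name: str
    x: float
    y: float
    state: str


@dataclass
class Agent(Entity):
    vision_radius: float = 5.0  # assumed value, chosen for the example
    memory: list[str] = field(default_factory=list)

    def perceive(self, world: list[Entity]) -> None:
        """Record the state of everything in range, the agent itself included."""
        for entity in world:
            distance = math.hypot(entity.x - self.x, entity.y - self.y)
            if distance <= self.vision_radius:
                self.memory.append(f"{entity.name} is {entity.state}")

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        """Return the k memories that share the most words with the query."""
        ranked = sorted(self.memory, key=lambda m: overlap(m, query), reverse=True)
        return ranked[:k]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def overlap(memory: str, query: str) -> float:
    """Jaccard similarity: a crude stand-in for a real relevance measure."""
    a, b = tokens(memory), tokens(query)
    return len(a & b) / len(a | b) if a and b else 0.0


if __name__ == "__main__":
    joon = Agent("Joon Park", 0, 0, "talking to Tyler")
    world = [
        joon,  # the agent is part of its own world, so it observes itself
        Entity("Tyler", 2, 1, "talking to Joon Park"),
        Entity("cup", 1, 0, "idle"),
        Entity("fountain", 40, 40, "idle"),  # out of range, never observed
    ]
    joon.perceive(world)
    joon.memory.append("Joon Park wrote a paper on generative agents")
    print(joon.retrieve("tell me about the generative agents paper"))
```

Even in this toy version, the idle cup lands in memory alongside everything else, and it is the retrieval step that keeps it out of a conversation about the paper.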

Regarding the idea that these could be future videogame NPCs, would you say that any of them behaved with a distinct personality? Or did they all sort of speak and act in roughly the same way?

There’s sort of two answers to this. They were designed to be very distinct characters. And each of them had different experiences in this world, because they talked to different people. If you are with a family, the people you likely talk to most is your family. And that’s what you see in these agents, and that influenced their future behavior.

So, they start with distinct identities. We give them some personality description, as well as their occupation and existing relationships at the start. And that input basically bootstraps their memory, and influences their future behavior. And their future behavior influences more future behavior. So these agents, what they remember and what they experience is highly distinct, and they make decisions based on what they experience. So they end up behaving very differently.

I guess at the simplest level: if you’re a teacher, you go to school, if you’re a pharmacy clerk, you go to the pharmacy. But it’s also the way you talk to each other, what you talk about, all of that changes based on how these agents are defined and what they experience.

Now, there are the boundary cases or sort of limitations with our current approach, which uses ChatGPT. ChatGPT was fine-tuned on human preferences. And OpenAI has done a lot of hard work to make these agents be prosocial, and not toxic. And in part, that’s because ChatGPT and generative agents have a different goal. ChatGPT is trying to become a really useful tool for people that minimizes the risk as much as possible. So they’re actively trying to make this model not do certain things. Whereas if you’re trying to create this idea of believability, humans do have conflict, and we have arguments, and those are a part of our believable experience. So you would want those in there. And that is less represented in generative agents today, because we are using the underlying model, ChatGPT. So a lot of these agents come out to be very polite, very collaborative, which in some cases is believable, but it can go a little bit too far.

Do you anticipate a future where we have bots trained on all kinds of different language sets? Ignoring for now the problem of collecting training data or licensing it, would you imagine, say, a model based on soap opera dialogue, or other things with more conflict?

There’s a bit of a policy angle to this, and sort of what we, as a society and community, decide is the right thing to do here. From the technical angle, yes, I think we’ll have the ability to train these models more quickly. And we already are seeing people or smaller groups other than OpenAI being able to replicate these large models to a surprising degree. So we will have, I think, to some extent, that ability.

Now, will we actually do that or decide as a society that it’s a good idea or not? I think it’s a bit of an open question. Ultimately, as academics (and I think this is not just for this project, but any kind of scientific contribution that we make), the higher the impact, the more we care about its points of failure and risks. And our general philosophy here is identify those risks, be very clear about them, and propose structure and principles that can help us mitigate those risks.

I think that’s a conversation that we need to start having with a lot of these models. And we’re already having those conversations, but where they’ll land, I think it’s a bit of an open question. Do we want to create models that can generate bad content, toxic content, for believable simulation? In some cases, the benefit may outweigh the potential harms. In some cases, it may not. And that’s a conversation that I’m certainly engaged with right now with my colleagues, but also it’s not necessarily a conversation that any one researcher should be deciding on.

One of your ethical considerations at the end of the paper was the question of what to do about people developing parasocial relationships with chatbots, and we’ve actually already reported on an instance of that. In some cases it feels like our main reference point for this is already science fiction. Are things moving faster than you would have anticipated?

Things are changing very quickly, even for those in the field. I think that part is totally true. We’re hopeful that we can have a lot of the really important ethical discussions, and at least start to have some rough principles around how to deal with these concerns. But no, it is moving fast.

It is interesting that we ultimately decided to refer back to science fiction movies to really talk about some of these ethical concerns. There was an interesting moment, and maybe this does illustrate this point a little bit: we felt strongly that we needed an ethical portion in the paper, like what are the risks and whatnot, but as we were thinking about that, the concerns that we first saw were just not something that we really talked about in the academic community at that point. So there wasn’t any literature per se that we could refer back to. So that’s when we decided, you know, we might just have to look at science fiction and see what they do. That’s where those kinds of things got referred to.

And I think you’re right. I think that we are getting to that point fast enough that we are now relying to some extent on the creativity of these fiction writers. In the field of human-computer interaction, there is actually what’s called a “generative fiction.” So there are actually people working on fiction for the purpose of foreseeing potential dangers. So it’s something that we respect. We are moving fast. And we are very much eager to think deeply about these questions.

You mentioned the next five to 10 years there. People have been working on machine learning for a while now, but again, from the lay perspective at least, it seems like we’re suddenly being confronted with a burst of advancement. Is this going to slow down, or is it a rocket ship?

What I think is interesting about the current era is, even those who are heavily involved in the development of these pieces of technology are not so clear on what the answer to your question is. So, I’m saying that’s actually quite interesting. Because if you look back, let’s say, 40 or 50 years, to when we were building transistors for the first few decades, or even today, we actually have a very clear eye on how fast things will progress. We have Moore’s Law, or we have a certain understanding that, at every instance, this is how fast things will advance.

What is unique about what we are seeing today, I think, is that a lot of the behaviors or capacities of AI systems are emergent, which is to say, when we first started building them, we just didn’t think that these models or systems would do that, but we later find that they are able to do so. And that is making it a little bit more difficult, even for the scientific community, to really have a clear prediction on what the next five years is going to look like. So my honest answer is, I’m not sure.

Now, there are certain things that we can say. And that often is within the scope of what I would say are optimization and performance. So, running 25 agents today took a fair amount of resources and time. It’s not a particularly cheap simulation to run even at that scale. What I can say is, I think within a year, there are going to be some, perhaps games or applications, that are inspired by generative agents. In two to three years, there might be some applications that make a serious attempt at creating something like generative agents in a more commercial sense. I think in five to ten years, it’s going to be much easier to create those kinds of applications. Whereas today, on day one, even within a scope of one or two years, I think it’s going to be a stretch to get there.

Now, in the next 30 years, I think it might be possible that computation will be cheap enough that we can create an agent society with more than 25 agents. I think in the paper, we mentioned a number like a million agents. I think we can get there, and I think those predictions are slightly easier for a computer scientist to make, because it has more to do with the computational power. So those are the things that I think I can say for now. But in terms of what AI will do? Hard to say.